Debug with Traffic Record & Override

Overview

In this quickstart, you'll learn how to debug microservices by combining traffic recording and API override capabilities. This workflow helps you understand issues, test fixes locally, and validate solutions before deploying, all without complex infrastructure.

Using the HotROD sample application, you'll learn how to:

- Record live traffic from a sandbox with a breaking change

- Inspect request and response payloads to understand the issue

- Create a local override server to test fixes surgically

- Selectively override specific endpoints while other functionality falls back to the sandbox

This hands-on guide demonstrates a real debugging workflow where a field name change breaks the location service.

Setup

Before you begin, you'll need:

- Signadot account (sign up here)

- Signadot CLI v1.3.0+ (installation instructions)

- Signadot Operator v1.2.0+ on your cluster

- A Kubernetes cluster

Option 1: Set up on your own Kubernetes cluster

Using minikube, k3s, or any other Kubernetes cluster:

- Install the Signadot Operator: Install the operator into your cluster.

- Install the HotROD Application: Install the demo app using the appropriate overlay:

Istio:

kubectl create ns hotrod --dry-run=client -o yaml | kubectl apply -f -
kubectl -n hotrod apply -k 'https://github.com/signadot/hotrod/k8s/overlays/prod/istio'

Linkerd / No Mesh:

kubectl create ns hotrod --dry-run=client -o yaml | kubectl apply -f -
kubectl -n hotrod apply -k 'https://github.com/signadot/hotrod/k8s/overlays/prod/devmesh'

Option 2: Use a Playground Cluster

Alternatively, provision a Playground Cluster that comes with everything pre-installed.

Demo App: HotROD

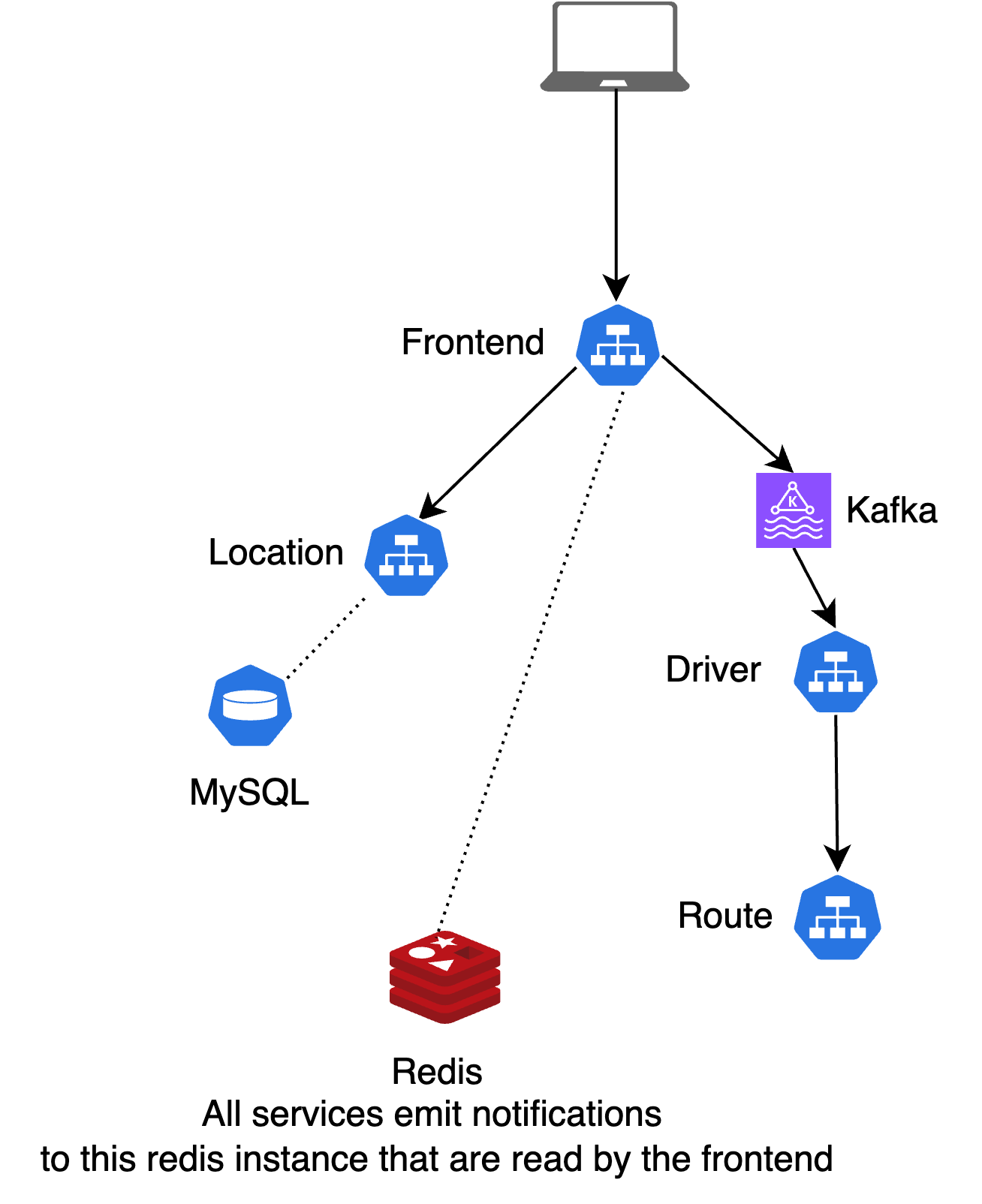

HotROD is a ride-sharing application with four microservices:

- frontend: Web UI for requesting rides

- driver: Manages drivers in the system

- route: Calculates ETAs

- location: Provides available pickup and dropoff locations for riders

The services use Kafka as a message queue, and Redis and MySQL for data storage.

Step 1: Configure Cluster Connectivity

Set up the infrastructure for local-to-cluster communication using the Signadot CLI. First, authenticate with your Signadot account:

signadot auth login

For more authentication options, see the CLI authentication guide. Then configure your local connection:

- Create $HOME/.signadot/config.yaml:

local:
  connections:
  - cluster: <cluster> # From https://app.signadot.com/settings/clusters
    type: ControlPlaneProxy

Note: ControlPlaneProxy establishes the connection from your machine via the Signadot control plane, making it ideal for getting started quickly. For production use, consider using the PortForward or ProxyAddress connection types instead. These options allow signadot local traffic to flow directly between your workstation and the cluster, bypassing the Signadot control plane for improved performance and reduced latency.

- Connect to the cluster:

You'll be prompted for your password, because signadot local connect needs root privileges to update /etc/hosts with cluster service names and to configure networking that directs local traffic to the cluster.

$ signadot local connect
signadot local connect has been started ✓
* runtime config: cluster demo, running with root-daemon
✓ Local connection healthy!
* operator version x.y.z
* port-forward listening at ":59933"
* localnet has been configured
* 45 hosts accessible via /etc/hosts
* sandboxes watcher is running
* Connected Sandboxes:
- No active sandbox

- Verify connectivity:

curl http://frontend.hotrod.svc:8080

Cluster services are now accessible from your local machine using their Kubernetes DNS names!

Step 2: Create a Sandbox with the Breaking Change

Pull Request #263 introduces a breaking change to the location service: it renames the Name field to FullName in the response. This breaks the frontend, which expects the original Name field. Let's create a sandbox to reproduce this issue:

name: pr-263-location
spec:
  description: Location service with breaking field name change
  cluster: "@{cluster}"
  ttl:
    duration: 1d
  forks:
  - forkOf:
      kind: Deployment
      namespace: hotrod
      name: location
    customizations:
      images:
      - image: signadot/hotrod:ee63f5381680e089fec075e9adb8f4c7c0cda38f-linux-amd64

You can run this spec from the Create Sandbox UI, or apply it with the Signadot CLI using the command below.

# Save the sandbox spec as `pr-263-sandbox.yaml`.

# Note that <cluster> must be replaced with the name of the linked cluster in

# signadot, under https://app.signadot.com/settings/clusters.

signadot sandbox apply -f ./pr-263-sandbox.yaml --set cluster=<cluster>

Step 3: Record Traffic to Understand the Issue

Now let's record live traffic flowing through this sandbox to understand what's breaking.

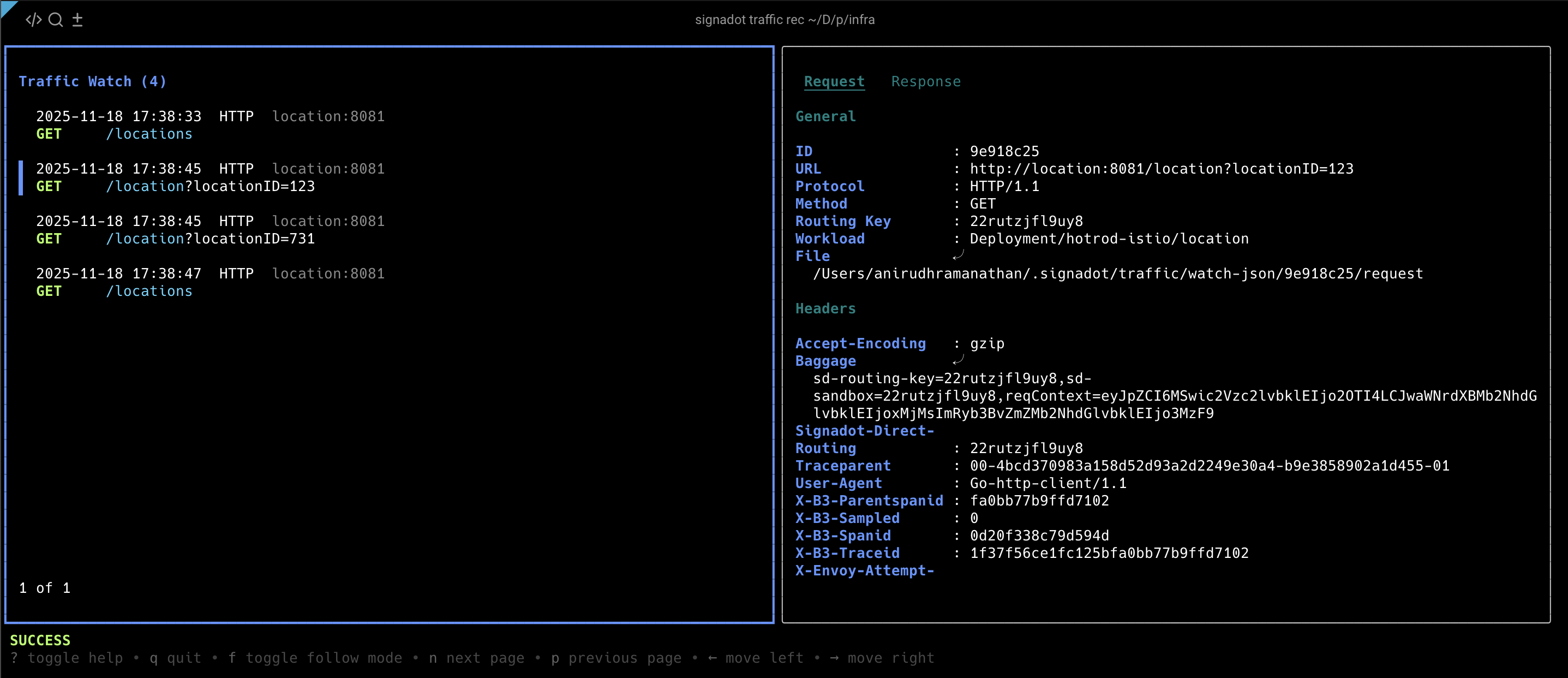

Start recording traffic with the interactive inspector:

signadot traffic record --sandbox pr-263-location --inspect --clean

This launches an interactive terminal UI (TUI) that displays traffic in real time. With the recording active, use the Signadot Chrome Extension to set the routing key for your sandbox, then visit http://frontend.hotrod.svc:8080 and request a ride. You'll notice that the location dropdown is broken or empty.

In the TUI, navigate to the GET /locations request and examine the response payload. You'll see it contains FullName instead of Name:

[
  {
    "id": 1,
    "FullName": "Dog My Home",
    "coordinates": "231,773"
  },
  {
    "id": 123,
    "FullName": "Lion Rachel's Floral Designs",
    "coordinates": "115,277"
  }
  // ...
]

But the frontend expects the original format, in which the field is serialized as name:

[
  {
    "id": 1,
    "name": "Dog My Home",
    "coordinates": "231,773"
  }
  // ...
]

This mismatch is what's breaking the location dropdown! Press Ctrl+C to stop recording.
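To make the failure mode concrete, here is a minimal sketch of how a consumer that reads name behaves on each payload. The payload shapes come from the recorded responses above; the dropdownLabels and missingField helpers are hypothetical and are not taken from the HotROD frontend code.

```javascript
// Hypothetical illustration: these helpers are assumptions, not HotROD code.

// Payload shape recorded from the sandbox (renamed field).
const sandboxResponse = [
  { id: 1, FullName: "Dog My Home", coordinates: "231,773" },
  { id: 123, FullName: "Lion Rachel's Floral Designs", coordinates: "115,277" },
];

// Payload shape the frontend was written against.
const expectedResponse = [
  { id: 1, name: "Dog My Home", coordinates: "231,773" },
];

// Builds dropdown labels the way a client expecting `name` might.
function dropdownLabels(locations) {
  return locations.map((loc) => loc.name);
}

// Returns the ids of entries that lack the field the consumer depends on.
function missingField(locations, field) {
  return locations.filter((loc) => !(field in loc)).map((loc) => loc.id);
}

console.log(dropdownLabels(expectedResponse)); // [ 'Dog My Home' ]
console.log(dropdownLabels(sandboxResponse)); // [ undefined, undefined ]
console.log(missingField(sandboxResponse, "name")); // [ 1, 123 ]
```

A check like missingField could also serve as a quick assertion against recorded payloads, although the on-disk layout of the recordings is not covered here.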

Recorded traffic is stored in ~/.signadot/traffic by default and can be inspected later using signadot traffic inspect. The files are in human-readable format, making it easy to build automation or tests from recorded traffic.

Step 4: Create a Local Server

Now that we understand the issue, let's create a local server that fixes the /locations endpoint while letting all other functionality fall back to the sandbox.

Create a file called location-fix.js:

const express = require('express');

const app = express();
app.use(express.json());

// Fixed location data with the correct "name" field
const LOCATIONS_DATA = [
  { id: 1, name: "Dog My Home", coordinates: "231,773" },
  { id: 123, name: "Lion Rachel's Floral Designs", coordinates: "115,277" },
  { id: 392, name: "Bear Trom Chocolatier", coordinates: "577,322" },
  { id: 567, name: "Eagle Amazing Coffee Roasters", coordinates: "211,653" },
  { id: 731, name: "Tiger Japanese Desserts", coordinates: "728,326" }
];

// Override the broken /locations endpoint
app.get('/locations', (req, res) => {
  console.log('Overriding /locations request with fixed response');
  // Set sd-override header to indicate we're handling this request
  res.set('sd-override', 'true');
  res.json(LOCATIONS_DATA);
});

// Let all other endpoints fall through to the sandbox
app.use((req, res) => {
  console.log(`Letting ${req.method} ${req.path} fall through to sandbox`);
  // Don't set sd-override header
  // Middleware will automatically route to the sandbox
  res.status(404).end();
});

const port = process.env.PORT || 8081;

app.listen(port, () => {
  console.log(`Local override server running on port ${port}`);
});

The sd-override response header controls request routing: when set to true, your local response is returned to the caller and the request does not reach the sandbox. When absent or false, the request is forwarded to the sandbox. This allows surgical precision: override only what you're fixing, let everything else flow to the sandbox.
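The routing rule can be modeled in a few lines. This is a simplified sketch of the behavior described above, not the Signadot middleware's implementation: isOverridden and resolveResponse are hypothetical names, and matching the value exactly against "true" is an assumption.

```javascript
// Simplified model of the routing rule described above. This is not the
// Signadot middleware's implementation; the function names are hypothetical.

// Returns true only when the local response sets sd-override to "true".
function isOverridden(headers) {
  // HTTP header names are case-insensitive, so normalize before comparing.
  const entry = Object.entries(headers).find(
    ([name]) => name.toLowerCase() === "sd-override"
  );
  return entry !== undefined && entry[1] === "true";
}

// Returns the response the caller would see. forwardToSandbox is invoked
// only when the local server did not claim the request.
function resolveResponse(localResponse, forwardToSandbox) {
  return isOverridden(localResponse.headers) ? localResponse : forwardToSandbox();
}

const forwardToSandbox = () => ({ source: "sandbox", status: 200, headers: {} });
const handled = { source: "local", status: 200, headers: { "sd-override": "true" } };
const unhandled = { source: "local", status: 404, headers: {} };

console.log(resolveResponse(handled, forwardToSandbox).source); // local
console.log(resolveResponse(unhandled, forwardToSandbox).source); // sandbox
```

Note that in this model the 404 from the fall-through handler never reaches the caller: because the header is absent, the sandbox's response is returned instead.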

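Install the dependencies (the server only requires the express package, for example via npm install express), then start the local server with node location-fix.js.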

Step 5: Create Local Override

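Now connect the local server to the sandbox with the signadot local override command, pointing the location workload in the pr-263-location sandbox at your local server on localhost:8081. See the API Override guide for the exact flags.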

This creates a middleware that intercepts requests to the location workload, routes them to your local process first (running on localhost:8081), and falls back to the sandbox automatically for requests you don't handle.

Step 6: Test the Fix

With your routing key still active in the Chrome extension, refresh the HotROD UI at http://frontend.hotrod.svc:8080.

Now the location dropdown should work! The /locations call is being handled by your local server with the fixed field name, while all other functionality (driver management, route calculation, etc.) continues to use the sandbox. You can see this in action by watching the console output from location-fix.js.

You can now iterate on your fix by modifying location-fix.js, restarting the server, and refreshing the UI to see changes immediately, with no need to build images or wait for deployments.

What Else Can You Do?

This traffic recording and override workflow works with any sandbox type: fork workloads (as shown here), sandboxes with local mappings where services run on your workstation, or virtual workloads that point to baseline services for near-zero cost traffic recording. You can even apply overrides to the baseline environment using virtual workloads, allowing you to test fixes against stable baseline services without creating any additional infrastructure.

Common use cases include:

- Testing API modifications: Override specific endpoints while keeping all other behavior unchanged

- Simulating error conditions: Override endpoints to return errors or timeouts for resilience testing

- Developing against unstable services: Mock unreliable dependencies locally while everything else uses cluster services
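As an illustration of the error-simulation use case, the sketch below shows an override handler that makes /locations fail while everything else falls back to the sandbox. It uses only Node's built-in http module; the 503 status, the error body, and the simulateFailure name are illustrative assumptions, not part of the original guide.

```javascript
// Hypothetical override handler that simulates a failing /locations endpoint.
// The status code and error body are illustrative choices.
const http = require("http");

function simulateFailure(req, res) {
  // Parse the path without the query string; the base URL is a placeholder.
  const { pathname } = new URL(req.url, "http://localhost");

  if (req.method === "GET" && pathname === "/locations") {
    // Claim the request so the simulated error is returned to the caller.
    res.writeHead(503, {
      "Content-Type": "application/json",
      "sd-override": "true",
    });
    res.end(JSON.stringify({ error: "simulated outage" }));
    return;
  }

  // No sd-override header: per the routing rule, this falls back to the sandbox.
  res.writeHead(404);
  res.end();
}

// The server is created but not started here.
const server = http.createServer(simulateFailure);
```

To try it in place of location-fix.js, call server.listen(process.env.PORT || 8081) at the end of the file and keep the same override connection running.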

How It Works

The override middleware sits in the request path and provides intelligent routing:

- Traffic recording: The trafficwatch middleware captures request/response pairs non-invasively

- Traffic override: The override middleware intercepts requests and routes them based on your logic

For more details, see the Record and Inspect Traffic and API Override guides.

Cleanup

When you're done debugging:

- Stop the local override server (Ctrl+C in the terminal running location-fix.js)

- Stop the override connection (Ctrl+C in the terminal running signadot local override)

- Delete the sandbox:

signadot sandbox delete pr-263-location

- Optionally disconnect from the cluster:

signadot local disconnect

Conclusion

You've successfully debugged a breaking change using traffic recording and local overrides! This approach lets you understand what broke and test fixes locally, without managing complex infrastructure.

To learn more:

- Record and Inspect Traffic Guide for advanced traffic recording patterns

- API Override Guide for more override techniques

- Virtual Workloads Reference for cost-effective baseline testing