Run Smart Tests on Sandboxes

Overview

In this quickstart, you'll learn how to use SmartTests to validate PR changes using Signadot's runtime behavior testing.

SmartTests are lightweight API tests written in Starlark that invoke REST APIs. They're designed to detect regressions by comparing the behavior of your modified service against the baseline version running in Kubernetes. The Signadot operator runs these tests against both versions simultaneously, and uses AI-powered semantic analysis to compare the responses and identify unexpected changes.

Using a sample application called HotROD, you'll learn how to detect regressions in the location service for each PR by:

- Setting up managed runner groups for executing tests

- Writing and running SmartTests to validate location service behavior

- Understanding how Smart Diff compares responses using AI-powered semantic analysis

- Adding explicit checks for specific requirements

This guide will demonstrate how SmartTests can help you catch regressions early by comparing actual service behavior in live Kubernetes environments.

Setup

Before you begin, you'll need:

- A Signadot account

- The Signadot CLI (see its installation instructions)

- A Kubernetes cluster

Option 1: Set up on your own Kubernetes cluster

Using minikube, k3s, or any other Kubernetes cluster:

- Install the Signadot Operator: deploy the operator into your cluster.

- Install the HotROD Application: install the demo app using the overlay that matches your service mesh.

For Istio:

```shell
kubectl create ns hotrod --dry-run=client -o yaml | kubectl apply -f -
kubectl -n hotrod apply -k 'https://github.com/signadot/hotrod/k8s/overlays/prod/istio'
```

For Linkerd or no service mesh:

```shell
kubectl create ns hotrod --dry-run=client -o yaml | kubectl apply -f -
kubectl -n hotrod apply -k 'https://github.com/signadot/hotrod/k8s/overlays/prod/devmesh'
```

Option 2: Use a Playground Cluster

Alternatively, provision a Playground Cluster that comes with everything pre-installed.

Demo App: HotROD

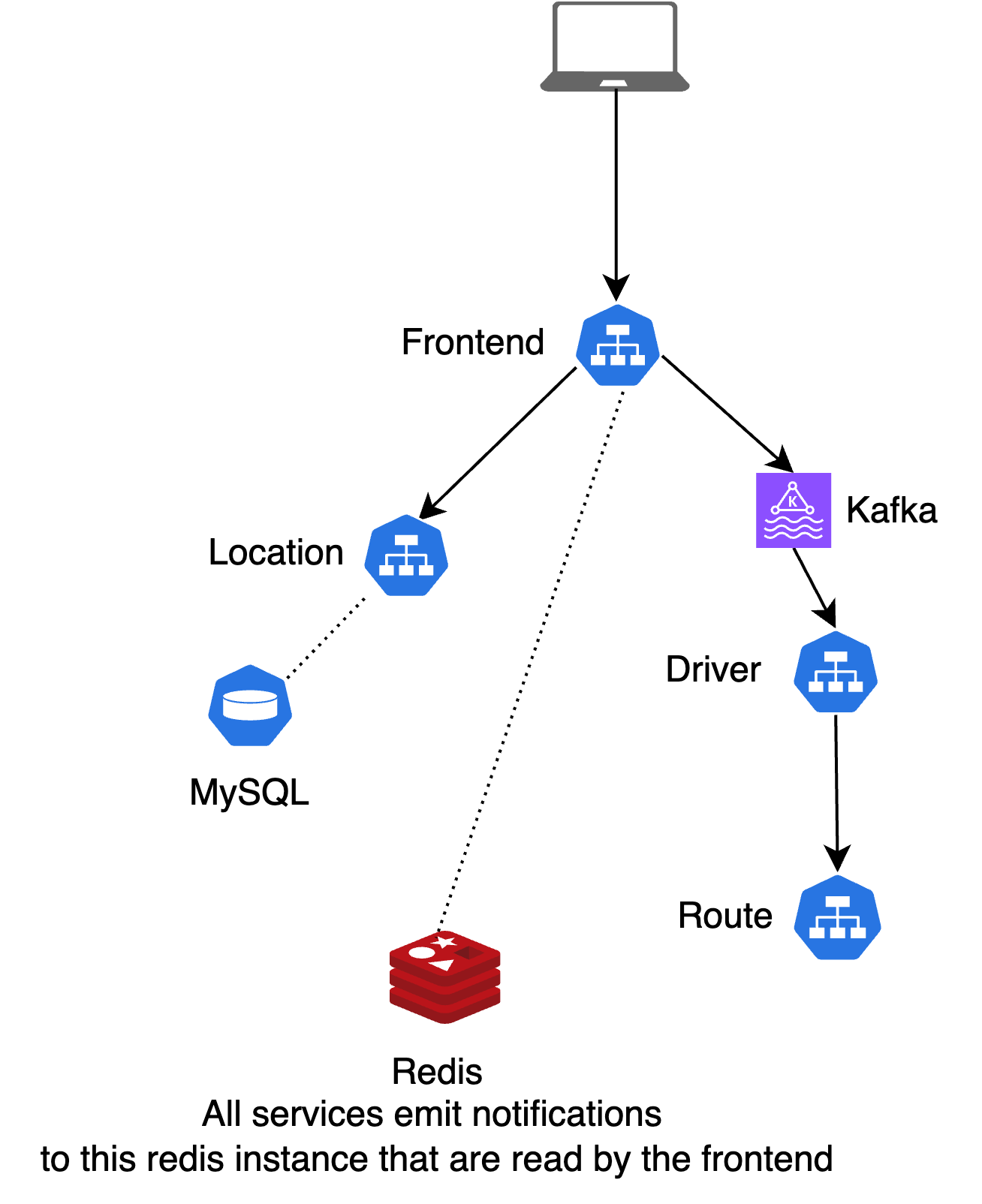

HotROD is a ride-sharing application with four microservices:

- frontend: Web UI for requesting rides

- driver: Manages drivers in the system

- route: Calculates ETAs

- location: Provides available pickup and dropoff locations for riders

The services use Kafka as a message queue, and Redis and MySQL for data storage.

We'll focus on the relationship between the frontend and location services, as the frontend depends on the location service to provide available pickup and dropoff locations for ride requests.

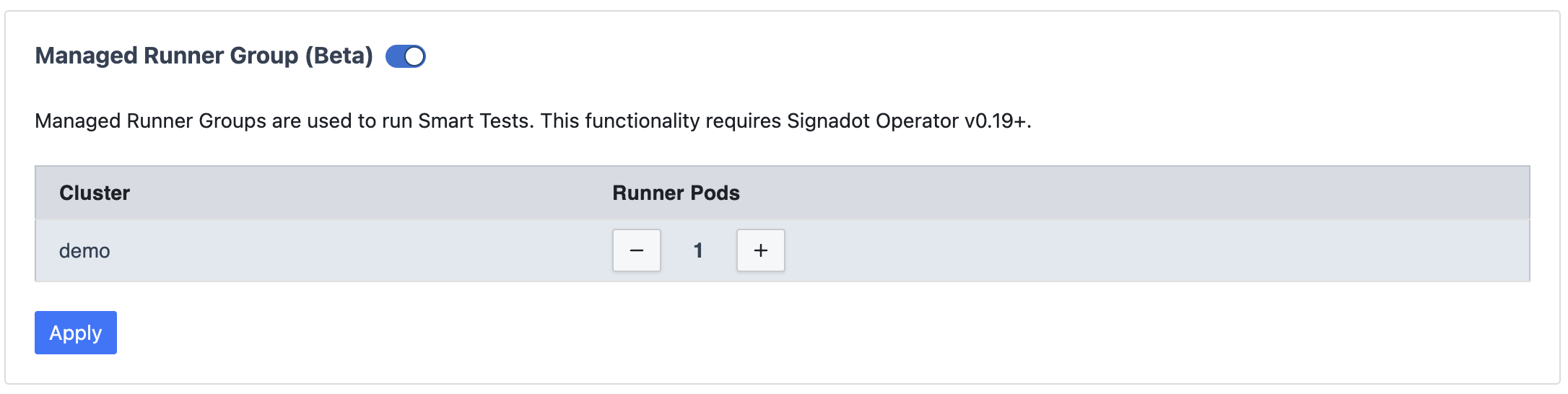

Step 1: Enable Managed Runner Groups

Before writing tests, you need to enable managed runner groups in your Signadot account. These runners execute your Smart Tests within the cluster.

- Go to the Settings page in your Signadot dashboard.

- Enable the "Managed Runner Groups" option.

This setting enables Signadot to automatically create and manage test runners in your cluster. The system will set up at least one pod within your cluster to execute the tests.

Step 2: Write Your First SmartTest

In the HotROD application, the frontend service depends on the location service to get pickup and dropoff locations. If the location service changes its API behavior in a PR, it could break the application.

Runtime behavior testing helps us ensure that such changes are caught early by comparing the actual behavior of the modified service against the baseline. Let's create a simple SmartTest that validates the location service behavior.

Create a file named location-smarttest.star:

```starlark
res = http.get(
    url="http://location.hotrod.svc:8081/locations",
    capture=True,  # enables Smart Diff
    name="getLocations"
)

ck = smart_test.check("get-location-status")
if res.status_code != 200:
    ck.error("bad status code: {}", res.status_code)

print(res.status_code)
print(res.body())
```

This test:

- Makes a request to the location service's `/locations` endpoint

- Enables Smart Diff with `capture=True` to automatically compare responses between baseline and sandbox

- Adds an explicit check to verify that the status code is 200

- Prints the response for debugging purposes

The key element here is the capture=True parameter. When this test runs on a sandbox containing a modified version of the location service, it will automatically capture both the request and response, compare them with the baseline using AI-powered semantic analysis, and identify any unexpected changes.
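To make "compare with the baseline" concrete, here is a deliberately naive, deterministic sketch in Python. It is not how Smart Diff works, since Smart Diff uses AI-powered semantic analysis. This function only walks two decoded JSON documents and lists:

- missing fields
- new fields
- type changes
- value changes

The function name `diff_json` and both payloads are hypothetical, not real HotROD responses.

```python
import json
from typing import Any, List


def diff_json(baseline: Any, sandbox: Any, path: str = "$") -> List[str]:
    """Naively list structural and value differences between two decoded JSON values."""
    # Different JSON types at the same path (e.g. number vs string)
    if type(baseline) is not type(sandbox):
        return [f"{path}: type changed from {type(baseline).__name__} to {type(sandbox).__name__}"]

    diffs: List[str] = []
    if isinstance(baseline, dict):
        for key in sorted(baseline.keys() - sandbox.keys()):
            diffs.append(f"{path}.{key}: missing in sandbox")
        for key in sorted(sandbox.keys() - baseline.keys()):
            diffs.append(f"{path}.{key}: new in sandbox")
        for key in sorted(baseline.keys() & sandbox.keys()):
            diffs.extend(diff_json(baseline[key], sandbox[key], f"{path}.{key}"))
    elif isinstance(baseline, list):
        if len(baseline) != len(sandbox):
            diffs.append(f"{path}: length changed from {len(baseline)} to {len(sandbox)}")
        # Compare element-wise up to the shorter of the two lists
        for i, (b, s) in enumerate(zip(baseline, sandbox)):
            diffs.extend(diff_json(b, s, f"{path}[{i}]"))
    elif baseline != sandbox:
        diffs.append(f"{path}: value changed from {baseline!r} to {sandbox!r}")
    return diffs


# Hypothetical payloads: the sandbox renamed "name" to "label"
baseline_body = '[{"id": 1, "name": "My Home"}]'
sandbox_body = '[{"id": 1, "label": "My Home"}]'
for line in diff_json(json.loads(baseline_body), json.loads(sandbox_body)):
    print(line)
```

A rule-based walk like this flags every difference equally. It also has no notion of which changes matter, which is the gap semantic analysis is meant to fill.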

Step 3: Run the Test on Baseline

First, let's run this test directly on the baseline to ensure it passes:

```shell
signadot smart-test run -f location-smarttest.star --cluster=<your-cluster>
```

You should see output similar to:

```
Created test run with ID "..." in cluster "...".

Test run status:
✅ ...ts/scratch/quickstart2/location-smarttest.star [ID: ...-1, STATUS: completed]

Test run summary:
* Executions
  ✅ 1/1 tests completed
* Checks
  ✅ 1 checks passed
```

This confirms that the test is indeed able to reach the location service on baseline and get a 200 response.

Step 4: Test a Breaking Change

Now, let's simulate a breaking change in the location service. We'll create a sandbox that modifies the location service to return a different response format (source: @signadot/hotrod#263):

```yaml
name: location-breaking-format
spec:
  description: "Modified location service with breaking API changes"
  cluster: "@{cluster}"
  forks:
    - forkOf:
        kind: Deployment
        namespace: hotrod
        name: location
      customizations:
        images:
          - image: signadot/hotrod:ee63f5381680e089fec075e9adb8f4c7c0cda38f-linux-amd64
            container: hotrod
```

You can open and run this spec from the Create Sandbox UI in the Signadot dashboard, or run the following command using the Signadot CLI:

```shell
# Save the sandbox spec as `location-breaking-change.yaml`
# Note that <cluster> must be replaced with the name of the linked cluster in
# signadot, under https://app.signadot.com/settings/clusters.
signadot sandbox apply -f ./location-breaking-change.yaml --set cluster=<cluster>
```

This sandbox uses a version of the location service where fields have been renamed, which should break the contract with the frontend service.

Now, run the same test against this sandbox:

```shell
signadot smart-test run -f location-smarttest.star --sandbox location-breaking-format --publish
```

This time, you'll see output like:

Created test run with ID "..." in cluster "...".

Test run status:

✅ ...ts/scratch/quickstart2/location-smarttest.star [ID: ..., STATUS: completed]

Test run summary:

* Executions

✅ 1/1 tests completed

* Diffs

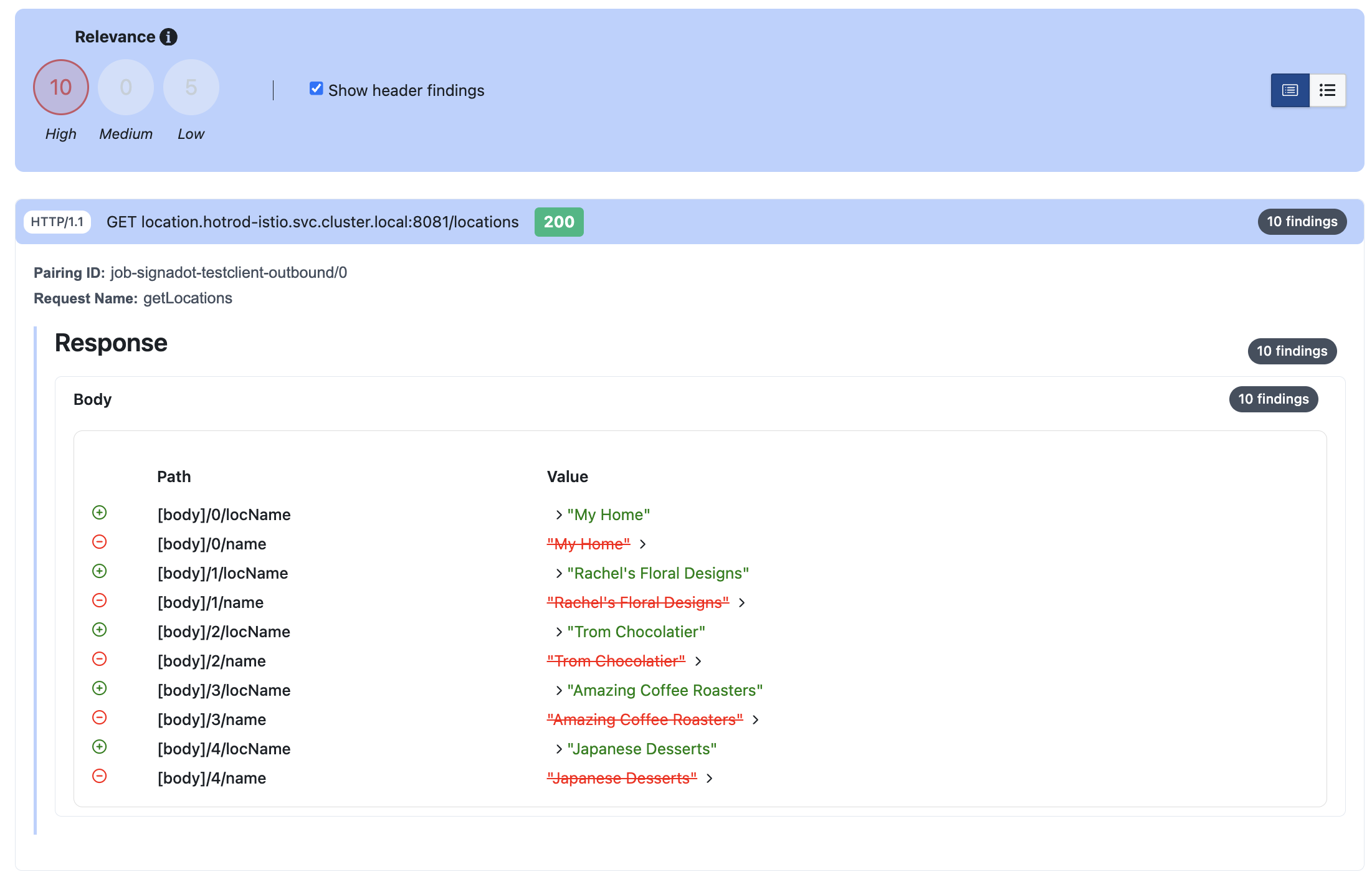

⚠️ 10 high relevance differences found

* Checks

✅ 1 checks passed

Notice something interesting here: our basic check for an HTTP 200 status code passes (the service is still responding successfully). However, Smart Diff has detected 10 high relevance differences between the baseline and sandbox responses. This is exactly what runtime behavior testing is designed to catch: changes that might not cause outright failures but could break consumers of the API.

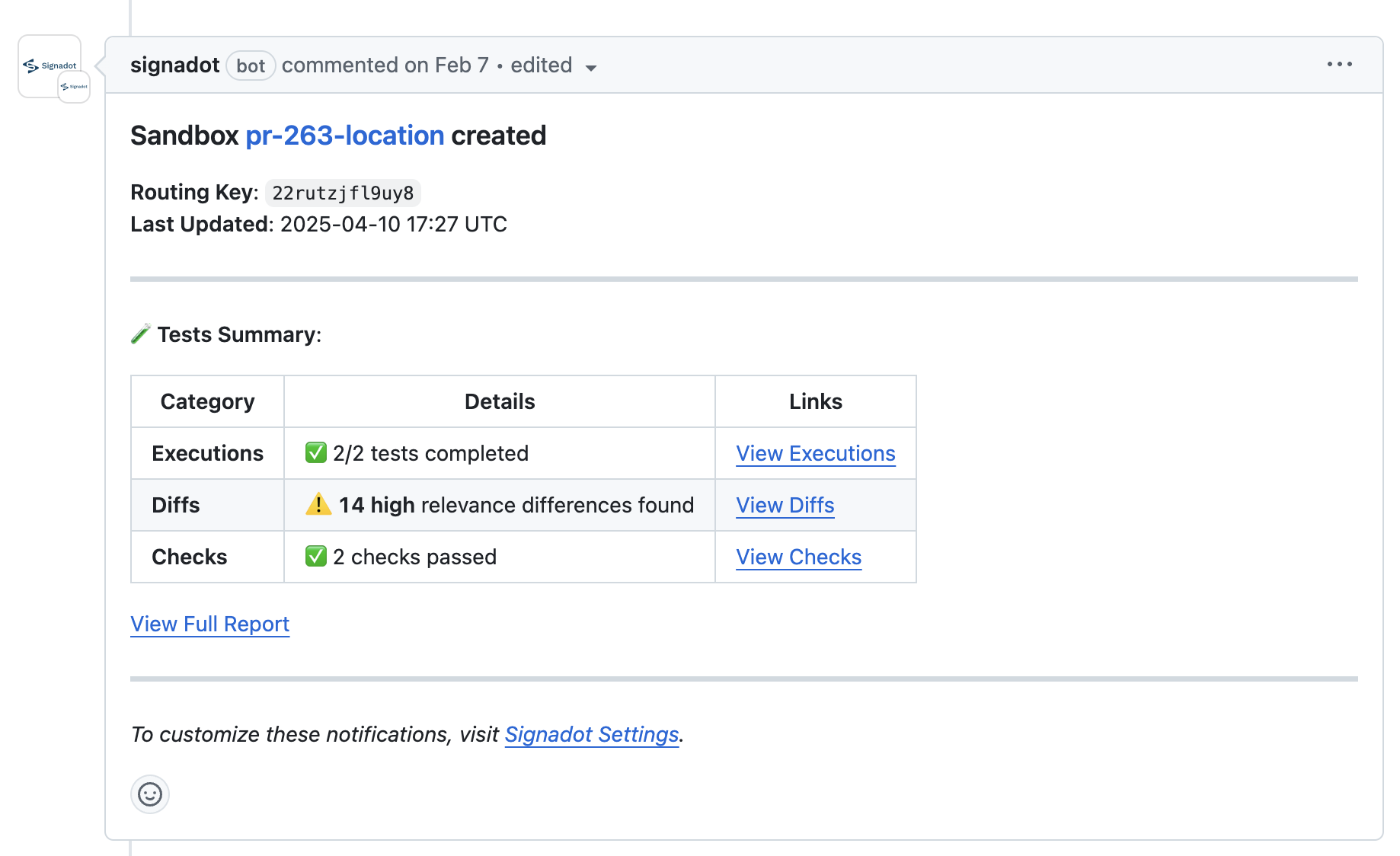

To see exactly what changed, go to your sandbox page and select the "Smart Tests" tab (location-breaking-format sandbox). You'll see a detailed breakdown of the differences:

The Smart Diff analysis shows that while the API is still functioning, the response structure has changed in ways that could break consumers - exactly the kind of regression we want to catch before it reaches production or even staging.

Understanding Smart Test Types

Smart Tests encompass two complementary approaches to PR validation: Smart Diff and Checks.

Smart Diff: AI-Powered Behavior Comparison

Smart Diff tests, as we saw above, automatically detect unexpected changes by comparing service behavior between baseline and sandbox environments using AI-powered semantic analysis. The key advantages are:

- No maintenance required - tests don't need updating as your baseline evolves

- AI-powered difference detection that intelligently ignores non-breaking changes (like timestamps)

- Automatic detection of new required fields, missing fields, type changes, and unexpected field values
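To see why ignoring non-breaking changes matters, consider the simplest deterministic alternative: a hand-maintained list of volatile fields stripped before comparison. This is a sketch, not Smart Diff's approach. The field name `generated_at` and the helper names are hypothetical. A list like this must be updated by hand whenever the API gains a new volatile field, which is the maintenance burden described in the bullets above.

```python
from typing import Any, Iterable


def strip_volatile(value: Any, volatile_keys: Iterable[str]) -> Any:
    """Return a copy of a decoded JSON value with the given keys removed at every depth."""
    keys = set(volatile_keys)
    if isinstance(value, dict):
        return {k: strip_volatile(v, keys) for k, v in value.items() if k not in keys}
    if isinstance(value, list):
        return [strip_volatile(v, keys) for v in value]
    return value


def same_behavior(baseline: Any, sandbox: Any, volatile_keys: Iterable[str]) -> bool:
    """Compare two responses while ignoring fields expected to change on every call."""
    keys = set(volatile_keys)
    return strip_volatile(baseline, keys) == strip_volatile(sandbox, keys)


# Hypothetical responses that differ only in a timestamp
baseline = {"id": 1, "name": "My Home", "generated_at": "2024-01-01T00:00:00Z"}
sandbox = {"id": 1, "name": "My Home", "generated_at": "2024-01-01T00:00:05Z"}
print(baseline == sandbox)                                 # False: raw comparison is noisy
print(same_behavior(baseline, sandbox, {"generated_at"}))  # True
```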

Checks: Explicit Behavior Validation

While Smart Diff handles most cases automatically, sometimes you want to explicitly verify specific requirements. Let's add a check to ensure the name field is always present:

```starlark
res = http.get(
    url="http://location.hotrod.svc:8081/locations",
    capture=True,
    name="getLocations"
)

# Check status code
ck = smart_test.check("get-location-status")
if res.status_code != 200:
    ck.error("bad status code: {}", res.status_code)

# Check name field presence
ck = smart_test.check("location-name-field")
locations = json.decode(res.body())
for loc in locations:
    if "name" not in loc:
        ck.error("location missing required 'name' field")

print(res.status_code)
print(res.body())
```
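You may want to exercise the name-field logic outside the Smart Test runtime, for example against a response body you saved locally. If so, it can be sketched in plain Python. This assumes, as the Starlark loop does, that the `/locations` body is a JSON array of objects. The helper `locations_missing_name` is hypothetical and not part of the Signadot API.

```python
import json
from typing import List


def locations_missing_name(body: str) -> List[int]:
    """Return the indexes of locations in a JSON array body that lack a 'name' field."""
    locations = json.loads(body)
    if not isinstance(locations, list):
        raise ValueError("expected a JSON array of locations")
    # Non-object entries are reported too, since they cannot carry a 'name' field
    return [i for i, loc in enumerate(locations) if not isinstance(loc, dict) or "name" not in loc]


print(locations_missing_name('[{"id": 1, "name": "My Home"}, {"id": 2}]'))  # [1]
```

Unlike the Starlark loop, this helper collects every offending index in one pass. It also rejects a non-array body outright instead of iterating over it.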

Running this enhanced test against our breaking change sandbox:

```shell
signadot smart-test run -f location-smarttest.star --sandbox location-breaking-format --publish
```

Now we'll see both the Smart Diff results and our explicit check failing:

```
Created test run with ID "..." in cluster "...".

Test run status:
✅ ...ts/scratch/quickstart2/location-smarttest.star [ID: ..., STATUS: completed]

Test run summary:
* Executions
  ✅ 1/1 tests completed
* Diffs
  ⚠️ 10 high relevance differences found
* Checks
  ❌ 1 checks passed, 1 failed
```

When you run tests with the --publish flag, results are automatically associated with the sandbox and can be surfaced in your development workflow through pull request comments and CI/CD checks.

How Smart Tests Work

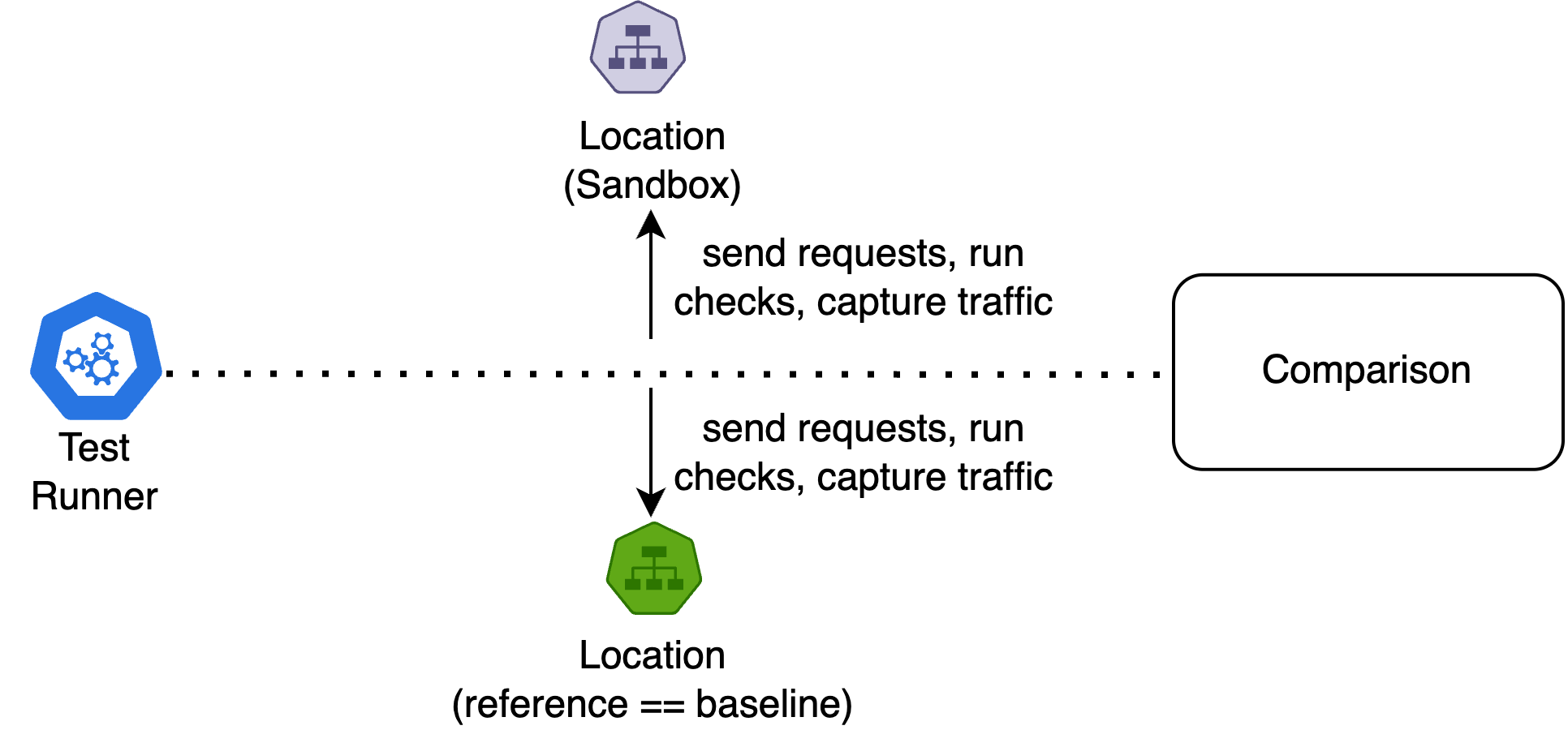

When you run a Smart Test, Signadot creates a "reference sandbox" that mirrors your baseline environment. The test traffic is then sent to both this reference sandbox and the sandbox containing your modified microservice simultaneously. This allows Smart Diff to perform a precise comparison of the requests and responses.

Smart Diff uses AI models to analyze the traffic and compare it against historical captures from your baseline environment. This historical context helps Smart Diff classify the importance of any differences it finds, assigning relevance scores based on how unexpected the changes are. This goes beyond traditional contract testing by detecting not just structural changes but also unexpected field values and behavioral differences. To learn more about how traffic is captured and analyzed, see our Traffic Capture Guide.
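This section does not describe Smart Diff's scoring model, so the following is only a toy illustration of using historical captures as context. A field whose value varied across past baseline captures is less surprising when it changes than a field that was always constant. The function `relevance`, its 0-to-1 scale, and the sample history are all hypothetical.

```python
from typing import Any, Dict, List


def relevance(field: str, history: List[Dict[str, Any]]) -> float:
    """Toy score: 1.0 if the field never varied in past baseline captures, lower if it often did."""
    values = [capture.get(field) for capture in history]
    if len(values) < 2:
        return 1.0  # no history to learn from: treat any change as unexpected
    # Fraction of consecutive captures in which the field changed value
    changes = sum(1 for prev, cur in zip(values, values[1:]) if prev != cur)
    return 1.0 - changes / (len(values) - 1)


# Hypothetical baseline history: "name" is stable, "generated_at" changes every time
history = [
    {"name": "My Home", "generated_at": "t1"},
    {"name": "My Home", "generated_at": "t2"},
    {"name": "My Home", "generated_at": "t3"},
]
print(relevance("name", history))          # 1.0
print(relevance("generated_at", history))  # 0.0
```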

Any checks defined in your test are run against both sandboxes: the reference sandbox and the sandbox containing your modified microservice. This dual execution ensures that any failures are truly due to the changes you're testing, rather than environmental factors.
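The reasoning behind dual execution can be sketched as a small decision table. Given whether a check passed on the reference sandbox and on the sandbox under test, only a failure confined to the sandbox points at the change itself. The function name and the outcome labels are hypothetical, not Signadot's result categories.

```python
def classify_check(passed_on_reference: bool, passed_on_sandbox: bool) -> str:
    """Interpret one check's outcome across the reference sandbox and the sandbox under test."""
    if passed_on_reference and not passed_on_sandbox:
        return "regression"    # only the modified service fails: likely caused by the change
    if not passed_on_reference and not passed_on_sandbox:
        return "pre-existing"  # baseline code fails too: environment issue or existing bug
    if not passed_on_reference and passed_on_sandbox:
        return "fixed"         # the change makes a previously failing check pass
    return "pass"


for ref, sb in [(True, True), (True, False), (False, False), (False, True)]:
    print(ref, sb, classify_check(ref, sb))
```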

Conclusion

In this quickstart, you've learned how to:

- Set up runtime behavior testing for your microservices

- Use Smart Diff to automatically detect unexpected changes using AI-powered analysis

- Add explicit checks for specific requirements

- Surface test results in your development workflow

Runtime behavior testing helps catch regressions early by comparing actual service behavior in live Kubernetes environments. To get started in your environment:

- For comprehensive PR validation with Git integration and CI/CD, follow our SmartTests in Git Guide

- For quick API validation without Git setup, try Synthetic API Tests in the UI

To learn more:

- Explore the Smart Test CLI Guide for detailed command usage

- Check out our Smart Test Reference for in-depth documentation on test capabilities