The Best AI Agent Frameworks for 2026


We will likely look back on 2025 as the year we moved from AI chatbots to AI agents. We have moved past the initial excitement of generating text and code snippets and entered a phase of rigorous engineering. The industry's focus has shifted from asking whether an agent can be built to determining how it can withstand the demands of production.

This shift exposes a gap between experimental prototypes and enterprise software. Engineering teams now require robust tools that provide reliability and observability rather than just API wrappers. Various agent frameworks have emerged to fill that gap, each with its own pros and cons.

To identify the best AI agent framework for your needs in 2026, you have to look beyond the hype and focus on the architecture of your stack and what kind of agent you plan to build.

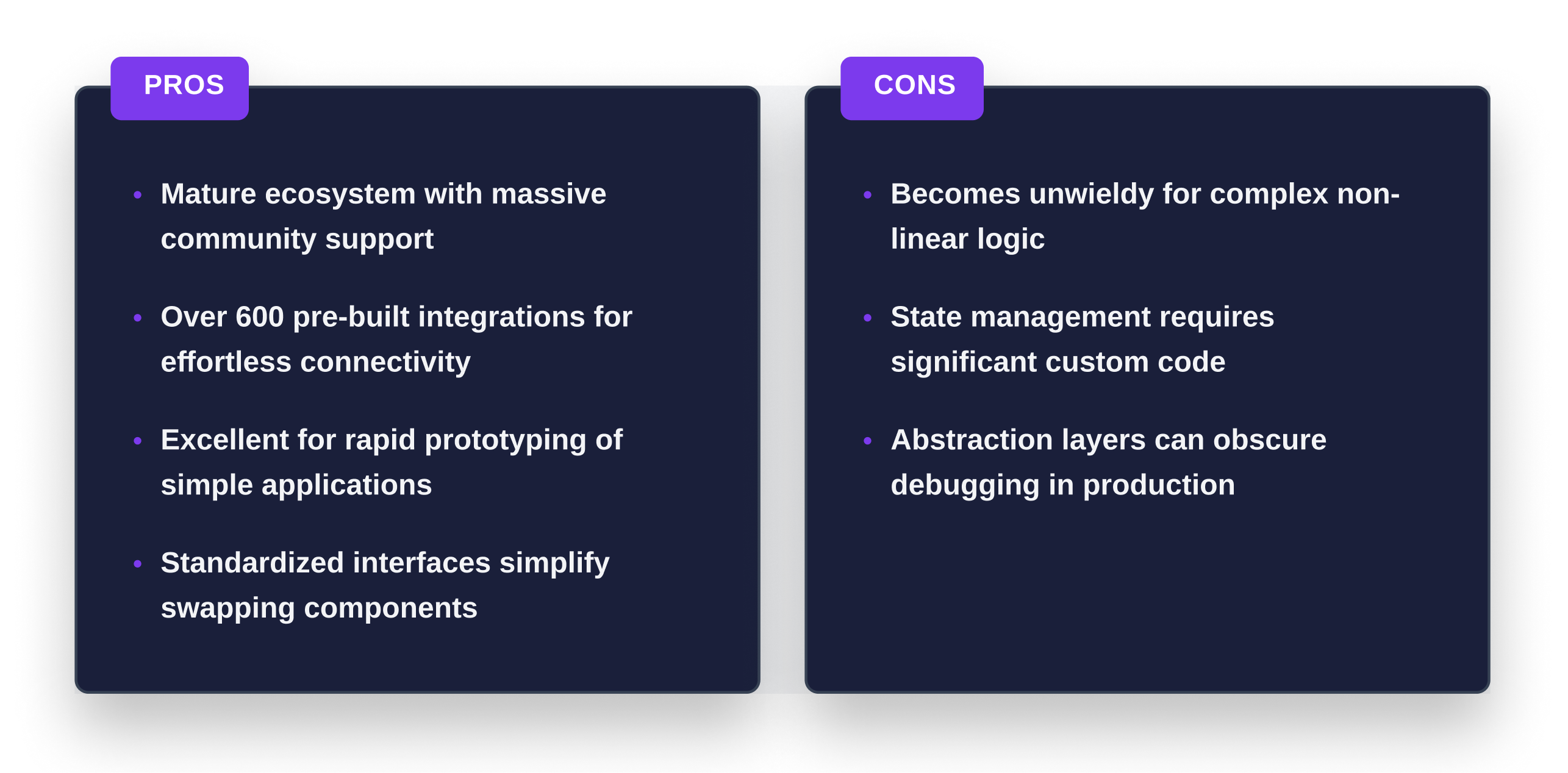

LangChain

Best for linear chains and simple applications

LangChain remains the foundation of the agent ecosystem. It is the most mature option with a massive community and over 600 integrations. If you need to connect a model to a specific database or API, LangChain probably has a tool for it.

The framework excels at linear chains, where logic flows in a predictable sequence. Take a standard Retrieval-Augmented Generation (RAG) use case, such as summarizing a PDF. The application reads the file, stores chunks in a vector database, and retrieves the relevant chunks to generate an answer. LangChain handles this sequence effortlessly.

It also provides a standard interface that simplifies initial development, allowing you to build prototypes quickly. However, this simplicity comes with a trade-off. While it handles linear sequences well, it can become unwieldy for complex non-linear workflows. Managing state across branching paths often requires significant custom code.
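To make the linear-chain idea concrete, here is a minimal, framework-free sketch of the read, store, retrieve, generate sequence in plain Python. It does not use LangChain's API. `ToyVectorStore` is a hypothetical stand-in for a vector database that scores chunks by shared words instead of embeddings. `generate` is a placeholder where a real chain would call a model.

```python
import re
from collections import Counter
from typing import List, Tuple

def tokenize(text: str) -> List[str]:
    """Lowercase word tokens; a crude stand-in for an embedding model."""
    return re.findall(r"[a-z0-9]+", text.lower())

def chunk(text: str, size: int = 8) -> List[str]:
    """Split text into chunks of `size` words."""
    words = text.split()
    return [" ".join(words[i:i + size]) for i in range(0, len(words), size)]

class ToyVectorStore:
    """Hypothetical stand-in for a vector database: scores chunks by shared words."""

    def __init__(self) -> None:
        self.items: List[Tuple[Counter, str]] = []

    def add(self, texts: List[str]) -> None:
        for text in texts:
            self.items.append((Counter(tokenize(text)), text))

    def search(self, query: str, k: int = 2) -> List[str]:
        q = Counter(tokenize(query))
        ranked = sorted(self.items, key=lambda item: sum((item[0] & q).values()), reverse=True)
        return [text for _, text in ranked[:k]]

def generate(prompt: str) -> str:
    """Placeholder for the model call; a real chain would send `prompt` to an LLM."""
    return prompt

def rag_chain(document: str, question: str) -> str:
    store = ToyVectorStore()
    store.add(chunk(document))             # 1. read the file and store its chunks
    context = store.search(question)       # 2. retrieve the most relevant chunks
    prompt = "Context:\n" + "\n".join(context) + f"\n\nQuestion: {question}"
    return generate(prompt)                # 3. generate an answer from the context
```

Each step feeds the next exactly once, with no branching or looping. That is why this shape maps so naturally onto a chain.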

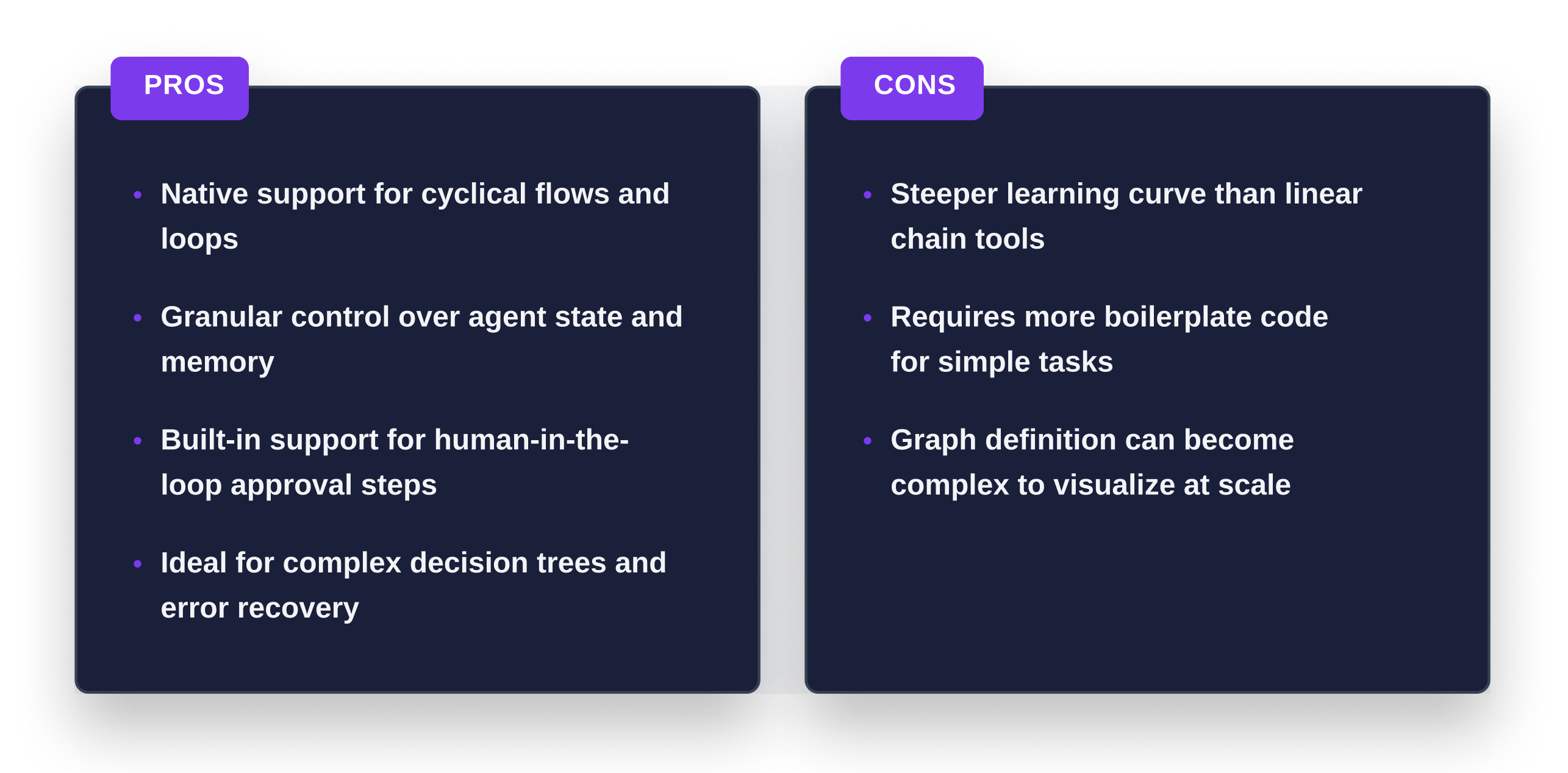

LangGraph

Best for complex, stateful agents and control loops

LangGraph is the LangChain team's framework for stateful agents. It uses a graph-based architecture that lets developers define application flow as nodes and edges. This supports cyclical flows that a traditional linear chain cannot express.

Cycles and branches are critical for resilient agents that must handle messy, real-world context in production. Consider a customer support bot resolving a shipping issue. The agent might check an order status and then need to loop back to the user if the order ID is incorrect, or branch to a claims process if the package is lost. LangGraph lets you map this decision tree explicitly.

You can define specific nodes for checks and edges for loops, giving you fine-grained control over execution. You can even implement human-in-the-loop patterns where the agent waits for approval. This makes it the premier choice for mission-critical applications where reliability is essential.
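The support-bot flow can be sketched as a small state graph in plain Python. This is not LangGraph's API. It only illustrates the pattern: nodes update shared state, and conditional edges decide where to go next, including a cycle back to `check_order` and a human hand-off. The order data, node names, and simulated user replies are all hypothetical.

```python
from typing import Callable, Dict

END = "__end__"
State = dict

# Hypothetical order database used only for this sketch.
ORDERS = {"A100": "delivered", "B200": "lost"}

def check_order(state: State) -> State:
    """Look up the order; unknown IDs get the status 'not_found'."""
    return {**state, "status": ORDERS.get(state["order_id"], "not_found")}

def ask_for_id(state: State) -> State:
    """Loop back to the user; the user's next reply is simulated from a list."""
    next_id, *rest = state["replies"]
    return {**state, "order_id": next_id, "replies": rest,
            "log": state["log"] + ["asked user for a valid order ID"]}

def open_claim(state: State) -> State:
    return {**state, "log": state["log"] + [f"opened claim for {state['order_id']}"]}

def confirm_delivery(state: State) -> State:
    return {**state, "log": state["log"] + [f"order {state['order_id']} was delivered"]}

def escalate(state: State) -> State:
    # Human-in-the-loop: stop and hand the conversation to a person.
    return {**state, "log": state["log"] + ["escalated to a human agent"]}

def route_after_check(state: State) -> str:
    """Conditional edge: loop, branch, or finish based on the lookup result."""
    if state["status"] == "not_found":
        return "ask_for_id" if state["replies"] else "escalate"
    return "open_claim" if state["status"] == "lost" else "confirm_delivery"

NODES: Dict[str, Callable[[State], State]] = {
    "check_order": check_order, "ask_for_id": ask_for_id,
    "open_claim": open_claim, "confirm_delivery": confirm_delivery, "escalate": escalate,
}
EDGES: Dict[str, Callable[[State], str]] = {
    "check_order": route_after_check,
    "ask_for_id": lambda s: "check_order",   # the cycle back to the lookup
    "open_claim": lambda s: END,
    "confirm_delivery": lambda s: END,
    "escalate": lambda s: END,
}

def run_graph(state: State, start: str = "check_order", max_steps: int = 20) -> State:
    node = start
    for _ in range(max_steps):
        state = NODES[node](state)
        node = EDGES[node](state)
        if node == END:
            return state
    raise RuntimeError("step limit reached; the graph may be looping")
```

For example, `run_graph({"order_id": "Z999", "replies": ["B200"], "log": []})` follows these steps:

- It looks up the unknown ID.
- It loops back to ask for a new one.
- It rechecks the new ID and finds the package was lost.
- It ends by opening a claim.

The `max_steps` guard is a deliberate design choice. Once cycles are allowed, you need an explicit bound on how long the agent can loop.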

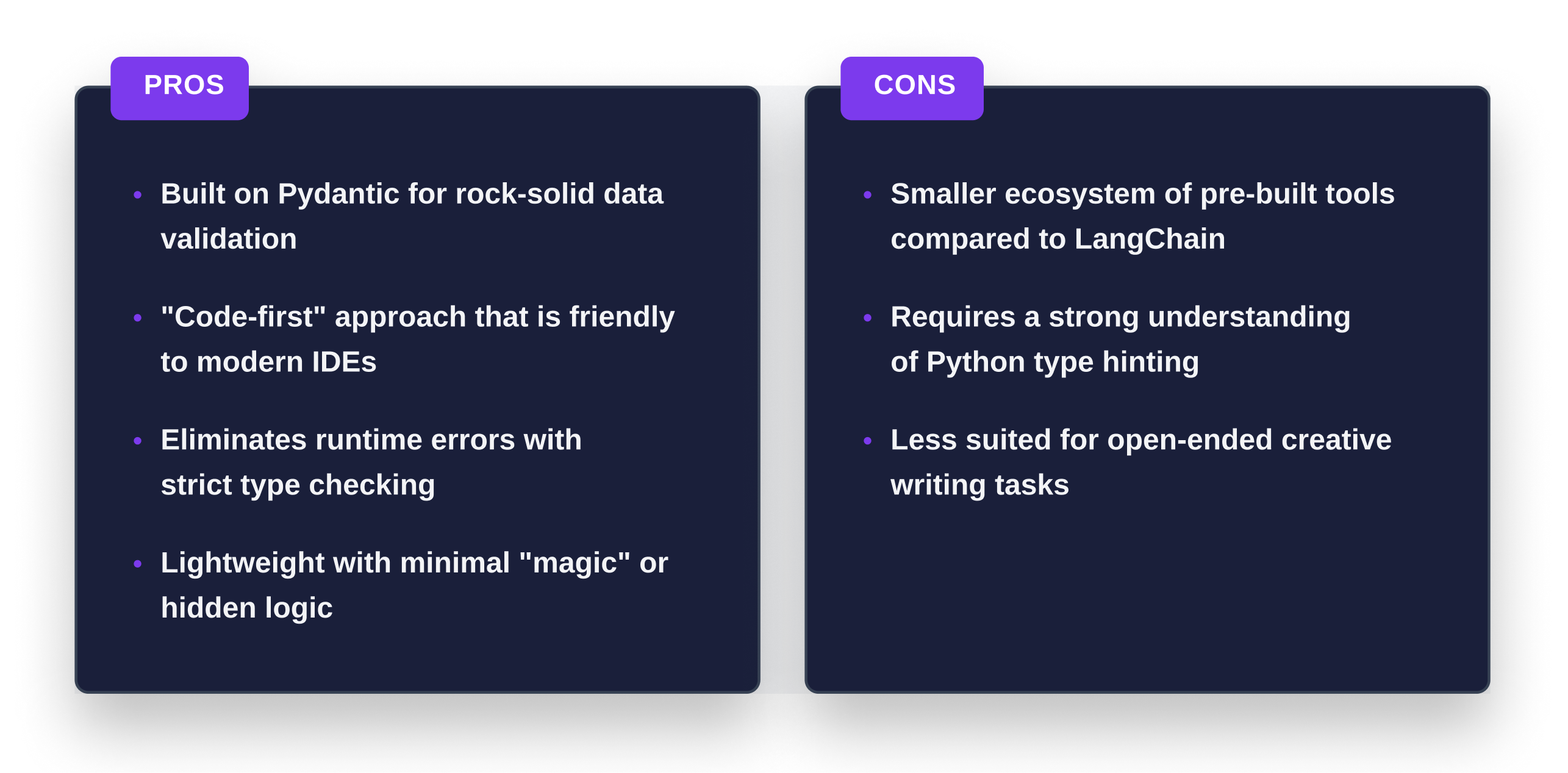

PydanticAI

Best for production-grade, type-safe agents

PydanticAI has emerged as a favorite among engineers who prioritize reliability and code quality over "magic." It is built by the team behind Pydantic, the validation library that much of the Python AI ecosystem relies on. The framework brings rigorous type safety to agent development.

It excels in scenarios where the structure of data is just as important as the content. PydanticAI is unmatched if you are building an agent that must output a strictly formatted JSON object to trigger a bank transaction or update a CRM record. It forces you to define your data schemas upfront. The agent's output is then validated against your system's requirements before anything downstream acts on it.
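The sketch below illustrates schema-first output handling. It validates a model's JSON output against a declared schema and rejects anything that does not match. PydanticAI would express the schema as a Pydantic model. To keep the example dependency-free, this version uses a standard-library dataclass instead. The `TransferRequest` fields and validation rules are hypothetical.

```python
import json
from dataclasses import dataclass
from decimal import Decimal, InvalidOperation

REQUIRED_FIELDS = {"from_account", "to_account", "amount", "currency"}

@dataclass(frozen=True)
class TransferRequest:
    """Hypothetical schema for a bank transfer the agent must emit."""
    from_account: str
    to_account: str
    amount: Decimal
    currency: str

    def __post_init__(self) -> None:
        if not self.amount.is_finite() or self.amount <= 0:
            raise ValueError("amount must be a positive number")
        if len(self.currency) != 3 or not (self.currency.isalpha() and self.currency.isupper()):
            raise ValueError("currency must be a 3-letter uppercase code")
        if self.from_account == self.to_account:
            raise ValueError("source and destination accounts must differ")

def parse_transfer(raw: str) -> TransferRequest:
    """Validate raw model output; raise ValueError instead of passing bad data on."""
    data = json.loads(raw)  # json.JSONDecodeError is a subclass of ValueError
    if not isinstance(data, dict) or set(data) != REQUIRED_FIELDS:
        raise ValueError("output does not match the TransferRequest schema")
    try:
        amount = Decimal(str(data["amount"]))
    except InvalidOperation as exc:
        raise ValueError("amount is not a number") from exc
    return TransferRequest(str(data["from_account"]), str(data["to_account"]),
                           amount, str(data["currency"]))
```

Rejected output never reaches the system that moves money. Every failure surfaces as a `ValueError`, so an orchestration layer can catch it and ask the model to try again.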

Unlike frameworks that hide logic behind heavy abstractions, PydanticAI feels like standard Python. It integrates well with modern IDEs, providing autocomplete and static type checking that catch many errors before runtime.

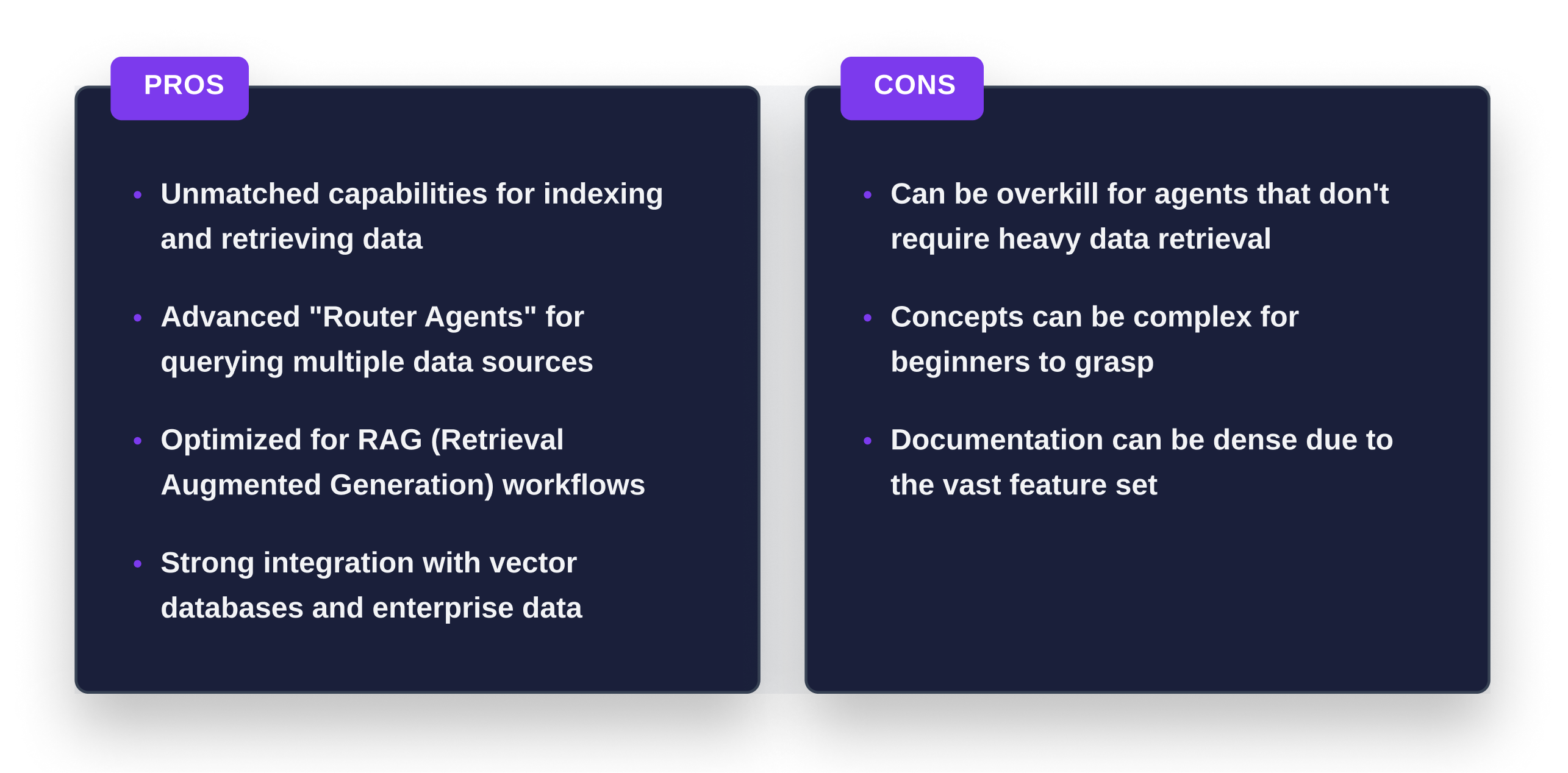

LlamaIndex

Best for data-centric RAG agents

While other frameworks focus on the conversation, LlamaIndex focuses on the data. It is the premier framework for building agents that need to traverse massive datasets to find answers. If your primary goal is an agent that can read through thousands of legal contracts or technical manuals, LlamaIndex is the superior choice.

It treats data sources as first-class citizens. Its "Router Agents" can decide which database or document store to query based on the user's question. For example, a financial agent could decide whether to query a SQL database for recent transaction data or search a vector store for a PDF policy document.
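A router boils down to two steps: pick the best tool for the question, then call it. In LlamaIndex, the selection is typically made by an LLM reading each tool's description. The sketch below substitutes simple keyword overlap so it runs without a model. The tool names, keywords, and placeholder query functions are all hypothetical.

```python
import re
from dataclasses import dataclass
from typing import Callable, FrozenSet, List

@dataclass(frozen=True)
class Tool:
    name: str
    description: str
    keywords: FrozenSet[str]  # crude stand-in for an LLM reading `description`
    run: Callable[[str], str]

def query_transactions_sql(question: str) -> str:
    return f"[SQL] rows matching: {question}"  # placeholder for a real SQL query

def search_policy_docs(question: str) -> str:
    return f"[vector store] passages for: {question}"  # placeholder for similarity search

TOOLS: List[Tool] = [
    Tool("transactions_sql", "Recent transaction records in a SQL database",
         frozenset({"transaction", "transactions", "payment", "charged", "balance", "last"}),
         query_transactions_sql),
    Tool("policy_docs", "PDF policy documents in a vector store",
         frozenset({"policy", "policies", "rule", "allowed", "fee", "terms"}),
         search_policy_docs),
]

def route(question: str, tools: List[Tool] = TOOLS) -> Tool:
    """Pick the tool whose keywords overlap most with the question (ties go to the first)."""
    words = set(re.findall(r"[a-z]+", question.lower()))
    return max(tools, key=lambda tool: len(tool.keywords & words))

def answer(question: str) -> str:
    return route(question).run(question)
```

For instance, "What transactions did I make last week?" routes to `transactions_sql`, and "What is the overdraft fee policy?" routes to `policy_docs`. Swapping the keyword scorer for a model call changes how the choice is made, not the shape of the router.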

This data-first approach makes it incredibly powerful for enterprise search and knowledge management applications where accuracy of retrieval is the primary metric of success.

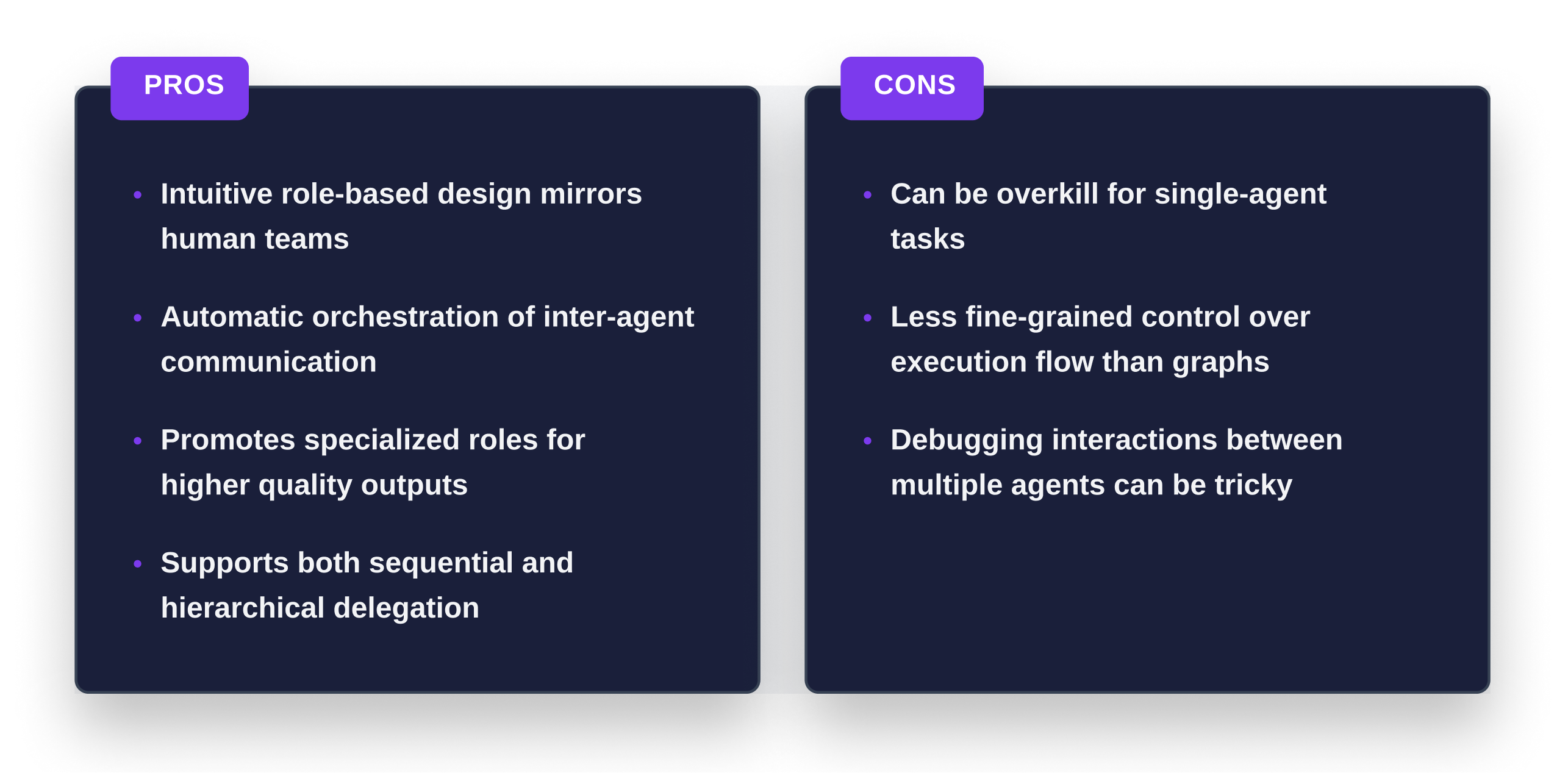

CrewAI

Best for role-based, multi-agent swarms

CrewAI models an application after a human organization. You define a crew in which each agent has a specific role and goal, much like employees on a team. This design forces you to think in terms of delegation and teamwork.

This framework is effective for open-ended, collaborative tasks. Imagine a marketing team creating a blog post. You could spin up three agents:

- a Researcher agent to find data,
- a Writer agent to craft the narrative, and
- an Editor agent to review the draft.

CrewAI handles the orchestration automatically, ensuring outputs are passed correctly between agents.

It supports both sequential and hierarchical processes. This structure can lead to higher-quality outputs because each agent focuses on a narrow area of expertise. It also abstracts away low-level communication between agents, allowing you to focus on strategy.
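A sequential process amounts to a pipeline in which each role-based agent receives the previous agent's output. The sketch below shows only that hand-off pattern in plain Python. It is not CrewAI's API, and the `act` functions are hypothetical stand-ins for LLM calls conditioned on each agent's role and goal.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple

@dataclass
class Agent:
    role: str
    goal: str
    act: Callable[[str], str]  # stand-in for an LLM call shaped by `role` and `goal`

def run_sequential(agents: List[Agent], brief: str) -> Tuple[str, List[str]]:
    """Pass the brief through each agent in order, recording every hand-off."""
    output = brief
    trace: List[str] = []
    for agent in agents:
        output = agent.act(output)
        trace.append(f"{agent.role}: {output}")
    return output, trace

# Hypothetical crew for the blog-post example.
researcher = Agent("Researcher", "Find supporting data", lambda brief: f"notes on '{brief}'")
writer = Agent("Writer", "Draft the post", lambda notes: f"draft from {notes}")
editor = Agent("Editor", "Review the draft", lambda draft: f"edited {draft}")
```

Running `run_sequential([researcher, writer, editor], "agent frameworks")` returns the Editor's output plus a trace of each hand-off. A hierarchical process would instead add a manager agent that decides which worker to call next, rather than following a fixed order.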

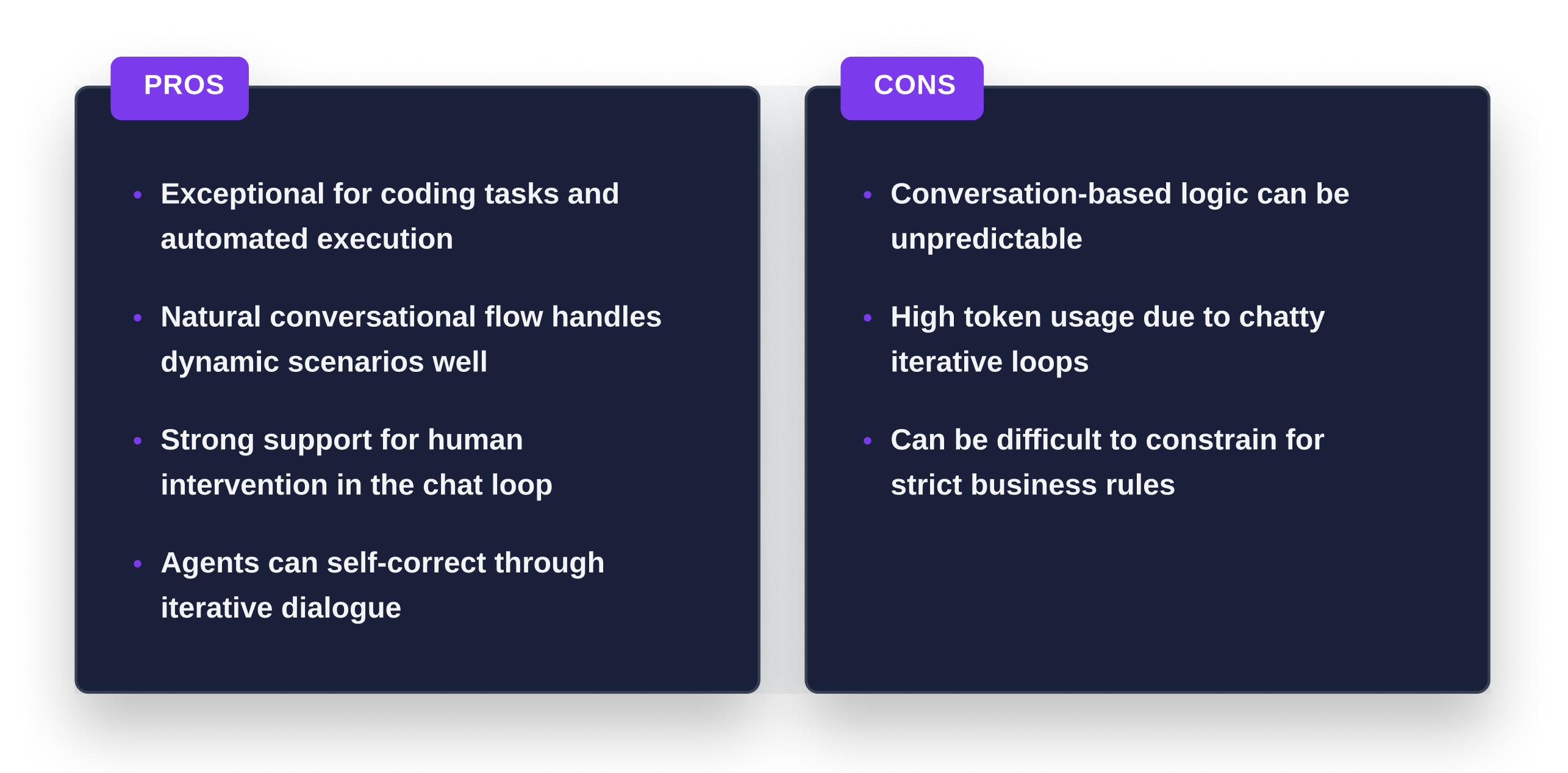

AutoGen

Best for conversation-driven flows

Microsoft’s AutoGen uses conversation as the primary mechanism for computation. Agents with different personas collaborate through a chat interface to solve problems. This supports diverse patterns, including dynamic group chats.

AutoGen is exceptionally strong for coding tasks. In a software development simulation, you can create a User Proxy acting as a manager and an Assistant acting as a developer. If you ask them to write a snake game, the Assistant writes the code and the User Proxy executes it.

If the code fails, the User Proxy returns the error message, and the Assistant generates a fix. This loop continues until the code runs successfully. The ability to execute code and iterate on the results makes AutoGen particularly powerful for autonomous systems.
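The write, execute, and fix loop can be sketched without AutoGen itself:

- `execute` plays the User Proxy, running code and returning any traceback.
- `scripted_assistant` is a hypothetical stand-in for the LLM-backed Assistant. Its first attempt contains a bug.

Calling `exec` on generated code is unsafe outside a demo. Real setups should run it in a sandbox such as a container.

```python
import traceback
from typing import Callable, List, Optional, Tuple

def execute(code: str) -> Optional[str]:
    """User Proxy role: run the code and return the traceback text, or None on success."""
    try:
        exec(code, {})  # WARNING: demo only; sandbox generated code in real systems
    except Exception:
        return traceback.format_exc()
    return None

def scripted_assistant(task: str, error: Optional[str]) -> str:
    """Hypothetical stand-in for an LLM-backed Assistant."""
    if error is None:
        return "result = sum(numbers)\n"  # first attempt forgets to define its input
    if "NameError" in error:
        return "numbers = [1, 2, 3]\nresult = sum(numbers)\nassert result == 6\n"
    return "raise NotImplementedError\n"

def solve(task: str, assistant: Callable[[str, Optional[str]], str],
          max_rounds: int = 3) -> Tuple[str, List[bool]]:
    """Alternate between writing and executing code, feeding errors back each round."""
    error: Optional[str] = None
    outcomes: List[bool] = []
    for _ in range(max_rounds):
        code = assistant(task, error)
        error = execute(code)
        outcomes.append(error is None)
        if error is None:
            return code, outcomes
    raise RuntimeError(f"no working code after {max_rounds} rounds:\n{error}")
```

Here the first round fails with a `NameError`. That traceback becomes the Assistant's next input, and the second round succeeds. The `max_rounds` cap keeps a model that never converges from looping forever.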

The Infrastructure Bottleneck

Building the agent is only the first step. The real challenge begins when you need to test it. Standard evaluations alone aren’t enough because they only measure text output. They don’t test the actions the agent takes or the side effects it creates in your database. To truly validate an agent, you need to test it against your full service topology.
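The difference between grading text and grading side effects shows up even in a toy test. In this sketch, a hypothetical refund agent has a retry bug that writes the refund twice. A text-only check passes, while a check on the fake, in-memory ledger catches the duplicate. All names here are illustrative assumptions, not a testing API.

```python
import re
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Tuple

@dataclass
class FakeLedger:
    """In-memory stand-in for the database the agent writes to."""
    refunds: List[Tuple[str, int]] = field(default_factory=list)

    def issue_refund(self, order_id: str, cents: int) -> None:
        self.refunds.append((order_id, cents))

def retrying_refund_agent(request: str, ledger: FakeLedger) -> str:
    """Hypothetical agent with a retry bug: it calls the refund tool twice."""
    match = re.search(r"order (\w+)", request)
    order_id = match.group(1) if match else "unknown"
    for _ in range(2):  # bug: a blind retry repeats a non-idempotent write
        ledger.issue_refund(order_id, 2500)
    return f"Refund of $25.00 issued for order {order_id}."

def evaluate(agent: Callable[[str, FakeLedger], str], request: str,
             expected_refunds: List[Tuple[str, int]]) -> Dict[str, bool]:
    ledger = FakeLedger()
    reply = agent(request, ledger)
    return {
        # What a text-only evaluation sees: the reply looks right.
        "text_ok": all(order_id in reply for order_id, _ in expected_refunds),
        # What a side-effect check sees: the state the agent actually left behind.
        "state_ok": ledger.refunds == expected_refunds,
    }
```

`evaluate(retrying_refund_agent, "Please refund order A100", [("A100", 2500)])` reports `text_ok` as true and `state_ok` as false. That is exactly the class of bug a text-only evaluation misses. A fake ledger is still only an approximation of the real services the agent would touch.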

Testing against the full topology poses a critical workflow problem, though. Most teams rely on a single shared staging environment that is already bottlenecked. This only gets worse when you add agents to the mix.

Agents often perform multi-step actions that modify state across several services. If multiple developers and agents test simultaneously, they interfere with each other's data and can break staging. This contention forces teams into a queue in which engineers and agents alike wait for a clear window to validate their work.

Conclusion

The decision of which framework to adopt depends on the complexity of your workflow and the kind of agent you are building. You have to weigh the need for simple linear execution against the requirements for complex state management or role-based collaboration. Success comes from selecting the engine that best aligns with your specific architectural goals.

However, selecting the engine is only half the battle. Regardless of the agent framework you choose, to ship reliable agents to production at scale, you have to solve the infrastructure challenge of how to fully validate them without adding to your existing CI/CD bottlenecks.

Signadot bridges the gap between the inner loop of agent development and the fidelity of production. This ensures that your team can innovate rapidly on agents and validate them against real services and data without waiting for CI.
