Why the MCP Server Is Now a Critical Microservice

.jpg)

In my previous article on preparing CI/CD pipelines to ship production-ready agents, I argued that we cannot ship agents to production that are driven primarily by non-deterministic models. Instead, they must be built as robust workflows where the large language model (LLM) is introduced strategically at specific steps within a deterministic control flow.

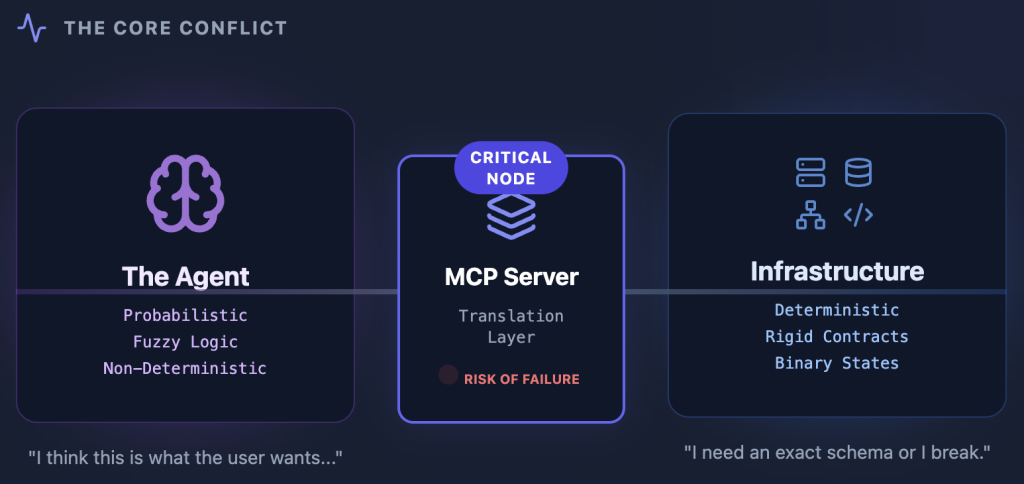

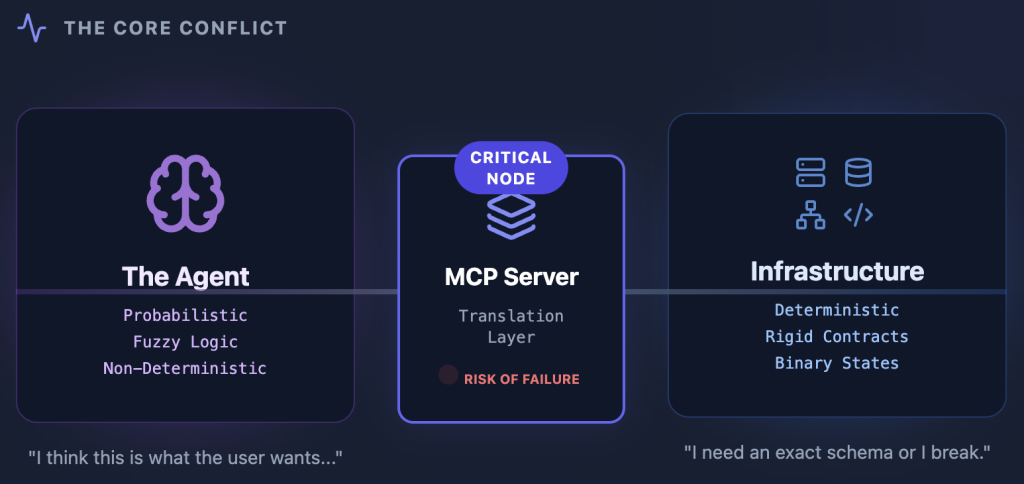

Now we must examine the most critical node in that framework.

The Model Context Protocol (MCP) server facilitates interactions between the probabilistic LLM node and the deterministic microservices workflow. It acts as the translation layer connecting the reasoning engine to external data and tools.

The model is one half of the agent architecture. The MCP server is the other half. While model evaluations validate the reasoning engine, they cannot verify the system as a whole. Validation strategies relying on mocks fail to test the agent as a workflow.

Reliability of the end-to-end workflow is paramount when shipping agents to production. The MCP server is the critical node in this topology, acting as both sensory organ and effector arm. If it transmits ambiguous signals, the agent acts erratically. It hallucinates. It degrades user trust. It causes critical business errors.

The Architectural Shift From Contracts to Semantics

To understand the failure risks, we must examine how the MCP server alters service contracts.

Service-to-service communication is deterministic in standard microservices environments. Service A calls Service B using a strict REST or gRPC contract. The interaction is rigid. It is predictable. It is easily validated.

An agentic workflow inverts this.

The agent is a nondeterministic actor operating on probabilistic logic. It decides when to call a tool based on semantic context provided by the MCP server. The server exposes a world model rather than just an API endpoint.

This makes the MCP server a distinct type of microservice. It is a translation layer converting probabilistic intent into deterministic action. This responsibility manifests in three operations requiring rigorous engineering.

1. Defining Capability Boundaries

The MCP server defines agent capabilities through JSON-RPC tool definitions.

If the server exposes a schema with vague descriptions, the agent cannot formulate a valid execution plan. A human developer might read documentation to clarify an API field, but the agent relies solely on metadata exposed by the list_tools capability.

Consider a payment operations agent handling refunds. A fragile MCP implementation might expose a tool named refund_user to process a refund.

This lacks semantic density. The model does not know whether this applies to a full or partial refund or if it handles tax calculation. It is a black box.

A robust implementation defines the boundary with precision. It exposes process_prorated_subscription_refund. The description explicitly states that it calculates the remaining balance for the current billing cycle and issues a credit.

The reasoning chain breaks without this specificity.

2. Governing the Context Economy

The MCP server governs the context window. It must retrieve backend data and format it for LLM consumption.

This data engineering challenge requires differentiating between signal and noise.

Providing a raw 5 MB JSON dump dilutes agent attention. It wastes tokens and increases latency. Conversely, providing too little data causes the agent to hallucinate missing details.

The server must act as a transformation layer that optimizes raw data into context-ready snippets.

3. Executing Side Effects

The MCP server executes actions for the agent. When an agent triggers a deployment, the server is the execution mechanism.

A confused agent can trigger destructive loops if the server lacks idempotency or error handling. The server must implement safeguards preventing the model from erroneously retrying state-changing operations.

The Engineering Rigor Required for Production

Shipping agents to production requires due diligence exceeding standard microservice development. This is most visible in return state ambiguity.

A traditional API might return a 404 error code, which a client handles with logic. An MCP server faces a more complex challenge. It must return a natural language description or structured tool result explaining why the action failed.

If the server returns a generic stack trace, the agent may retry endlessly or invent a plausible but incorrect reason for failure. The error message becomes part of the prompt for the next conversation turn. It must be engineered as carefully as the system prompt.

Latency is also critical. Agents operate in a sequential thought loop. They reason. They call a tool. They wait. They reason again.

A slow server breaks the cognitive chain. High latency causes context timeouts, forcing the agent to abandon workflows. This leaves systems in inconsistent states.

Scaling Testing via Multitenancy

The nondeterministic nature of the client makes testing difficult. Traditional unit tests are insufficient.

Unit testing a Python function to ensure valid JSON output does not prove that an agent will understand how to use it. Mocks are equally ineffective. They decouple the test from real system behavior and create false confidence.

The only way to validate an MCP server is through rigorous end-to-end testing against real dependencies. However, spinning up full cluster replicas for every test is rarely feasible.

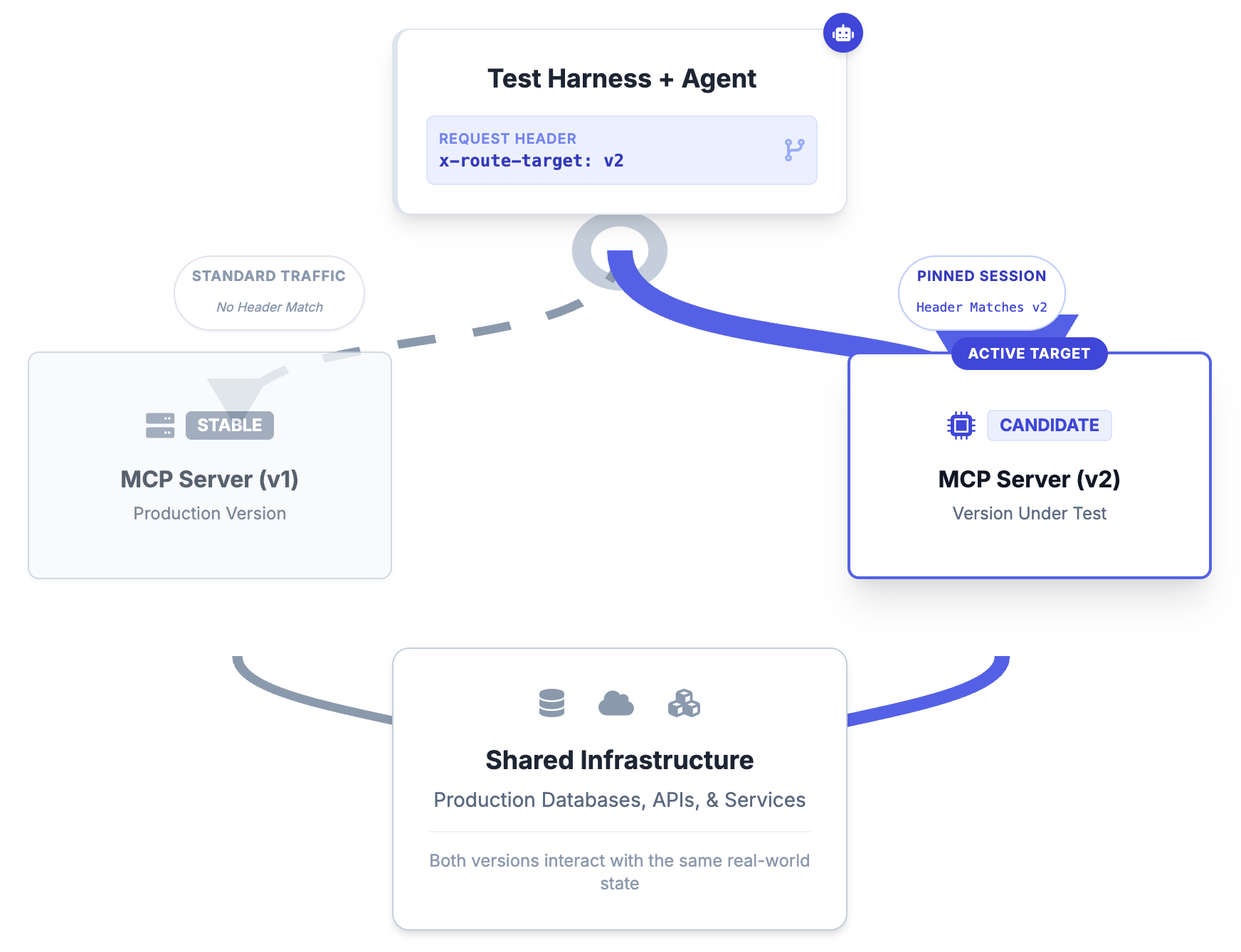

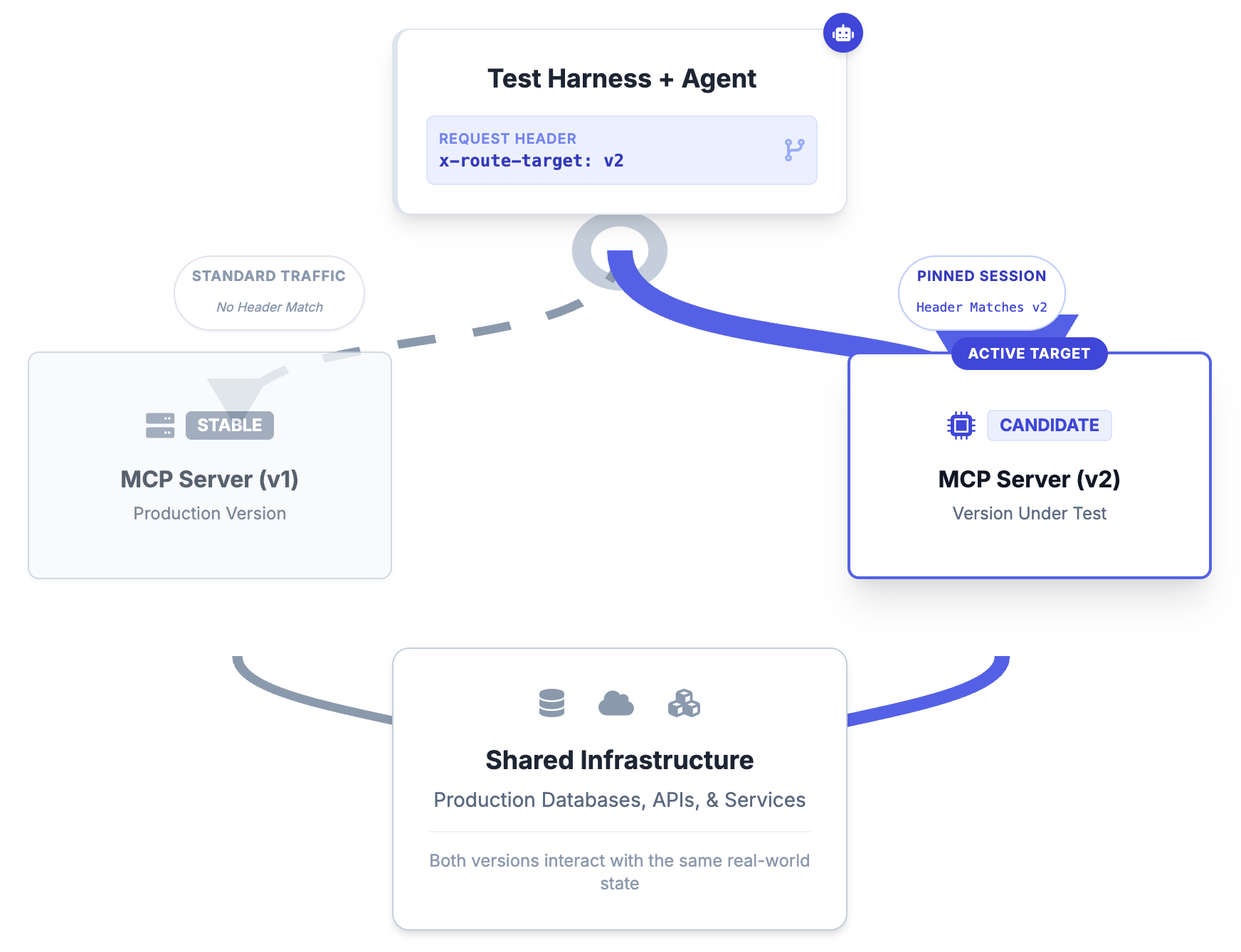

To validate an MCP server without the overhead of full environment replication, we treat the test run as a logical slice within a shared cluster. This life cycle relies on header based routing and session affinity:

- Handshake and routing: The test harness initializes the agent with specific context metadata (such as a baggage header or a custom routing parameter) during the WebSocket or transport handshake. This signals the ingress controller or service mesh to route the persistent JSON-RPC session specifically to the candidate MCP server (the version under test), bypassing the stable production traffic.

- Session isolation: Once connected, the agent operates within a strictly isolated session. While the underlying compute resources may be shared, the logical control flow is pinned to the candidate artifact. This ensures that the nondeterministic reasoning of the agent is exercising only the new code paths.

- Shared downstream state: The candidate MCP server processes the agent’s intent but executes side effects against shared downstream dependencies such as staging databases or stable microservices. This eliminates the need for mocks, allowing the agent to interact with a realistic “world model” where API contracts and data schemas are genuine.

This architecture enables safe end-to-end semantic testing. The harness prompts the agent to perform an operation and verifies the state change against downstream microservices.

Isolation at the connection layer turns the test run into a private lane on a public highway. This enables full end-to-end validation of the MCP server without saturating testing infrastructure or introducing resource contention in shared staging environments

Treat It Like Critical Infrastructure

Teams that are shipping advanced, customer-facing agents understand that robust MCP servers are critical infrastructure. We must recognize them as complex architectural nodes that directly affect agent reliability.

Model evals are critical but insufficient for production standards. Rigorous integration testing of agents with MCP servers is necessary.

An agent is only as effective as its tools. A fragile MCP server creates a fragile agent. Elevating the MCP server to a fully validated microservice is essential for advancing agent development from internal experiments to products that are ready for production.

Learn more about how to implement this testing workflow for your agents at Signadot.com

Subscribe

Get the latest updates from Signadot

Why the MCP Server Is Now a Critical Microservice

.jpg)

In my previous article on preparing CI/CD pipelines to ship production-ready agents, I argued that we cannot ship agents to production that are driven primarily by non-deterministic models. Instead, they must be built as robust workflows where the large language model (LLM) is introduced strategically at specific steps within a deterministic control flow.

Now we must examine the most critical node in that framework.

The Model Context Protocol (MCP) server facilitates interactions between the probabilistic LLM node and the deterministic microservices workflow. It acts as the translation layer connecting the reasoning engine to external data and tools.

The model is one half of the agent architecture. The MCP server is the other half. While model evaluations validate the reasoning engine, they cannot verify the system as a whole. Validation strategies relying on mocks fail to test the agent as a workflow.

Reliability of the end-to-end workflow is paramount when shipping agents to production. The MCP server is the critical node in this topology, acting as both sensory organ and effector arm. If it transmits ambiguous signals, the agent acts erratically. It hallucinates. It degrades user trust. It causes critical business errors.

The Architectural Shift From Contracts to Semantics

To understand the failure risks, we must examine how the MCP server alters service contracts.

Service-to-service communication is deterministic in standard microservices environments. Service A calls Service B using a strict REST or gRPC contract. The interaction is rigid. It is predictable. It is easily validated.

An agentic workflow inverts this.

The agent is a nondeterministic actor operating on probabilistic logic. It decides when to call a tool based on semantic context provided by the MCP server. The server exposes a world model rather than just an API endpoint.

This makes the MCP server a distinct type of microservice. It is a translation layer converting probabilistic intent into deterministic action. This responsibility manifests in three operations requiring rigorous engineering.

1. Defining Capability Boundaries

The MCP server defines agent capabilities through JSON-RPC tool definitions.

If the server exposes a schema with vague descriptions, the agent cannot formulate a valid execution plan. A human developer might read documentation to clarify an API field, but the agent relies solely on metadata exposed by the list_tools capability.

Consider a payment operations agent handling refunds. A fragile MCP implementation might expose a tool named refund_user to process a refund.

This lacks semantic density. The model does not know whether this applies to a full or partial refund or if it handles tax calculation. It is a black box.

A robust implementation defines the boundary with precision. It exposes process_prorated_subscription_refund. The description explicitly states that it calculates the remaining balance for the current billing cycle and issues a credit.

The reasoning chain breaks without this specificity.

2. Governing the Context Economy

The MCP server governs the context window. It must retrieve backend data and format it for LLM consumption.

This data engineering challenge requires differentiating between signal and noise.

Providing a raw 5 MB JSON dump dilutes agent attention. It wastes tokens and increases latency. Conversely, providing too little data causes the agent to hallucinate missing details.

The server must act as a transformation layer that optimizes raw data into context-ready snippets.

3. Executing Side Effects

The MCP server executes actions for the agent. When an agent triggers a deployment, the server is the execution mechanism.

A confused agent can trigger destructive loops if the server lacks idempotency or error handling. The server must implement safeguards preventing the model from erroneously retrying state-changing operations.

The Engineering Rigor Required for Production

Shipping agents to production requires due diligence exceeding standard microservice development. This is most visible in return state ambiguity.

A traditional API might return a 404 error code, which a client handles with logic. An MCP server faces a more complex challenge. It must return a natural language description or structured tool result explaining why the action failed.

If the server returns a generic stack trace, the agent may retry endlessly or invent a plausible but incorrect reason for failure. The error message becomes part of the prompt for the next conversation turn. It must be engineered as carefully as the system prompt.

Latency is also critical. Agents operate in a sequential thought loop. They reason. They call a tool. They wait. They reason again.

A slow server breaks the cognitive chain. High latency causes context timeouts, forcing the agent to abandon workflows. This leaves systems in inconsistent states.

Scaling Testing via Multitenancy

The nondeterministic nature of the client makes testing difficult. Traditional unit tests are insufficient.

Unit testing a Python function to ensure valid JSON output does not prove that an agent will understand how to use it. Mocks are equally ineffective. They decouple the test from real system behavior and create false confidence.

The only way to validate an MCP server is through rigorous end-to-end testing against real dependencies. However, spinning up full cluster replicas for every test is rarely feasible.

To validate an MCP server without the overhead of full environment replication, we treat the test run as a logical slice within a shared cluster. This life cycle relies on header based routing and session affinity:

- Handshake and routing: The test harness initializes the agent with specific context metadata (such as a baggage header or a custom routing parameter) during the WebSocket or transport handshake. This signals the ingress controller or service mesh to route the persistent JSON-RPC session specifically to the candidate MCP server (the version under test), bypassing the stable production traffic.

- Session isolation: Once connected, the agent operates within a strictly isolated session. While the underlying compute resources may be shared, the logical control flow is pinned to the candidate artifact. This ensures that the nondeterministic reasoning of the agent is exercising only the new code paths.

- Shared downstream state: The candidate MCP server processes the agent’s intent but executes side effects against shared downstream dependencies such as staging databases or stable microservices. This eliminates the need for mocks, allowing the agent to interact with a realistic “world model” where API contracts and data schemas are genuine.

This architecture enables safe end-to-end semantic testing. The harness prompts the agent to perform an operation and verifies the state change against downstream microservices.

Isolation at the connection layer turns the test run into a private lane on a public highway. This enables full end-to-end validation of the MCP server without saturating testing infrastructure or introducing resource contention in shared staging environments

Treat It Like Critical Infrastructure

Teams that are shipping advanced, customer-facing agents understand that robust MCP servers are critical infrastructure. We must recognize them as complex architectural nodes that directly affect agent reliability.

Model evals are critical but insufficient for production standards. Rigorous integration testing of agents with MCP servers is necessary.

An agent is only as effective as its tools. A fragile MCP server creates a fragile agent. Elevating the MCP server to a fully validated microservice is essential for advancing agent development from internal experiments to products that are ready for production.

Learn more about how to implement this testing workflow for your agents at Signadot.com

Subscribe

Get the latest updates from Signadot