Namespace-based environments for testing: Pros and Cons

Kubernetes namespaces are a powerful tool for creating isolated environments within a Kubernetes cluster. A namespace is a logical unit within Kubernetes: it lets you create groupings of resources within a single cluster that are logically separated from one another, which makes it easier to manage resources and access permissions. Each grouping runs within the larger Kubernetes cluster and can include its own set of resources, such as pods, services, and volumes.

Setting up a namespace is generally straightforward and can be done using a simple YAML file. To create a namespace, first define the namespace in a YAML file, specifying the name and any other relevant metadata. You can then apply the YAML file to your Kubernetes cluster using the kubectl apply -f namespace.yaml command. Once the namespace is created, you can deploy your application to the namespace using the kubectl apply command, specifying the namespace using the --namespace flag.
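The steps above can be sketched in a few lines of Python. This is a minimal illustration, not a recommended workflow: it builds a Namespace manifest as a dict, prints it as JSON (kubectl apply -f accepts JSON as well as YAML), and lists the two kubectl invocations described above. The namespace name team-test, the purpose label, and the file names namespace.json and app.yaml are all hypothetical.

```python
import json


def namespace_manifest(name, labels=None):
    """Build a minimal Namespace manifest as a plain dict."""
    manifest = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {"name": name},
    }
    if labels:
        # Labels are optional metadata; the ones used below are made up.
        manifest["metadata"]["labels"] = dict(labels)
    return manifest


def kubectl_commands(namespace, manifest_path="namespace.json", app_path="app.yaml"):
    """Return the two kubectl invocations described in the text, as argument lists.

    The file names are hypothetical placeholders.
    """
    return [
        # 1. Create the namespace from the manifest file.
        ["kubectl", "apply", "-f", manifest_path],
        # 2. Deploy the application into that namespace.
        ["kubectl", "apply", "-f", app_path, "--namespace", namespace],
    ]


if __name__ == "__main__":
    manifest = namespace_manifest("team-test", {"purpose": "testing"})
    print(json.dumps(manifest, indent=2))
    for command in kubectl_commands("team-test"):
        print(" ".join(command))
```

Saving the printed JSON as namespace.json and running the two printed commands against a cluster should create the namespace and deploy into it, assuming app.yaml holds your application manifests.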

While namespaces have many possible uses, this piece considers their potential for functional testing, and more generally for letting engineers experiment with their code in a production-like environment.

The appeal of namespaces for testing and experimentation is significant. After all, they’re easy to set up and core to the Kubernetes spec, meaning you’re not relying on obscure add-ons. Their greatest advantage is an easily viewable and controllable grouping of resources (e.g., pods, services, deployments). Namespaces provide great isolation at the resource level. All your kubectl commands can be flagged to your namespace, or your namespace can be set as the default, which means you can safely make changes without worrying about wrecking another team’s services. The Operations team still has to maintain the cluster as a whole, but this costs less than standing up a whole new cluster for each team.

Each new service has a DNS entry, of the form <service-name>.<namespace-name>.svc.cluster.local. So if you know a service name and its namespace, you can use DNS lookup to access it even if it’s not in your namespace.
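As a small sketch of that naming pattern, the helper below assembles the DNS name from a service name and a namespace. The function name, the service name checkout, and the namespace team-edward are hypothetical, and cluster.local is assumed as the cluster domain because it is the one shown in the pattern above.

```python
def service_dns_name(service, namespace, cluster_domain="cluster.local"):
    """Build a service DNS name of the form <service>.<namespace>.svc.<cluster-domain>."""
    return f"{service}.{namespace}.svc.{cluster_domain}"


if __name__ == "__main__":
    # A pod in any namespace could use this name to reach the service.
    print(service_dns_name("checkout", "team-edward"))
    # prints "checkout.team-edward.svc.cluster.local"
```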

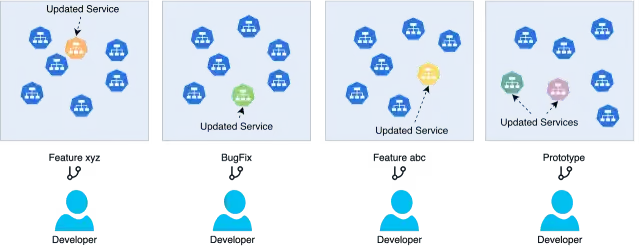

An illustration of developers trying commits to their own namespace.

Stating our goals: More accurate previews and tests

The overarching goal of offering a namespace to a team is to get them to test with greater accuracy. Using a namespace doesn’t directly imply an exact architecture for development and testing. Typically, a team will spin up all the services and resources it needs for testing in a namespace. To save costs, it’s also possible to spin up a subset of the services and resources and mock the rest.

Operations Workload

I mentioned above that having multiple namespaces is simpler than running several clusters for different teams. This is true, but it picks an extreme example: I’m not aware of anyone who’s standing up half a dozen separate Kubernetes clusters just for testing (if I’m wrong about this, please join our community Slack and let me know!). Considered on its own, the labor of maintaining separate namespaces is significant.

If you create namespaces for two separate large teams in your org, e.g. team-jacob and team-edward, there’s no built-in function to sync these namespaces. That means that changes to datastores, third-party APIs, or just updates to unrelated services in the namespace will all have to be synced manually.
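Because nothing syncs namespaces for you, a team might at least want to detect when two of them have drifted apart. The sketch below is one hypothetical way to do that: it assumes you have already collected, for each namespace, a mapping from service name to image reference (how you collect it is not shown), and it reports every service whose image differs or that exists in only one namespace. The service names and image tags are invented for illustration.

```python
def namespace_drift(reference, candidate):
    """Compare two service -> image mappings.

    Returns a dict of service -> (reference_image, candidate_image) for every
    service that differs. A missing service shows up as None on that side.
    """
    drift = {}
    for service in sorted(set(reference) | set(candidate)):
        reference_image = reference.get(service)
        candidate_image = candidate.get(service)
        if reference_image != candidate_image:
            drift[service] = (reference_image, candidate_image)
    return drift


if __name__ == "__main__":
    # Hypothetical snapshots of what each namespace is running.
    team_jacob = {"orders": "orders:1.4.0", "payments": "payments:2.1.0"}
    team_edward = {"orders": "orders:1.3.2", "payments": "payments:2.1.0", "search": "search:0.9.0"}

    for service, (ours, theirs) in namespace_drift(team_jacob, team_edward).items():
        print(f"{service}: team-jacob={ours} team-edward={theirs}")
```

A check like this only reports drift in the services themselves; it does nothing about datastores or third-party APIs, which still have to be reconciled by hand.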

It’s also worth mentioning that the storage and compute cost of maintaining multiple namespaces is nearly the same as the cost of maintaining separate clusters.

How Large Teams Evolve away from Namespaces

I recently had the chance to speak with an enterprise-level operations team that spent several years using namespaces extensively. They were initially pleased to be able to give teams free rein within their namespace and to get more accurate testing. However, the spin-up time of a namespace was a core challenge. As the complexity of the app increased, it took progressively longer to spin up all the services and resources, and, worse, the needs of different teams eventually caused namespaces to diverge. There was also no simple indicator for developers that their namespace was up-to-date, so test results weren’t a reliable sign that something would work when pushed to staging.

Further, while product teams might be clearly delineated, Operations teams aren’t. This meant that individual namespaces didn’t necessarily have an owner on the Operations or Developer Experience team, so no one was answerable when a namespace was out-of-date.

The final result was multiple namespaces that weren’t being actively maintained and couldn’t be relied on. Over time, fewer and fewer devs were using their separated namespace and they would just use feature flags and test on staging.

Compromises to an environment’s fidelity: mocks and emulation

With the high maintenance cost of keeping every dependency updated in a sandbox namespace, any agile team will look for solutions that require less ongoing upkeep. Instead of constantly updating a namespace to connect to new versions of a third-party API, why not mock those API responses? After all, the response format should shift much more slowly. Instead of keeping a bunch of unrelated services available to this namespace, why not emulate them with a LocalStack-like tool? While all these tools help with maintenance needs, they do so at a clear cost. The resulting stack is more stable, but it no longer resembles prod, and the risk increases that things will fail between development, staging, and production. Looking back at the goals stated above, test fidelity was the point. If we start mocking other stack components, we’ll eventually get to a sandbox environment so small we might as well mock everything and run it on our laptop.

Where do deploy-time bugs come from? Data and Dependencies matter

The appeal of namespace-based testing is that your compute and other services can easily exist on a cluster with others, letting you test and experiment in your real Kubernetes environment. The drawback comes down to the difficulty of replicating data and dependencies. With so many namespaces, each one needs its own datastore instance (to be isolated from other namespaces), and this gets complicated. These datastores can be managed cloud services or run within Kubernetes (e.g., Dockerized MySQL). In either case, the challenge is to have a system that can spin up temporary versions of these databases along with the seed data they need. That is quite a bit of additional work, and namespaces won’t ‘get’ you anything special when you want to use third-party dependencies. And it’s the interaction with these two categories, data and dependencies, that is so often the source of deploy-time errors.

Who hasn’t had their code fail in production because it turns out that only the production database contains Little Bobby Tables?

Conclusions

Namespaces absolutely work well for simple apps with only a few services, or for development teams with dozens of engineers, and they can work in very large teams if actively maintained. But this approach to testing often won’t handle an extreme change in scale. If you don’t keep testing namespaces maintained, the result is a vicious cycle of more and more sync problems, with developers finding bugs and circumventing this stage.

In a discussion on a private Slack (quoted with permission), Mason Jones had this to say about using namespaces for development and testing:

At my previous company as we scaled up we used a shared dev/test cluster and built our own tooling to provide on-demand namespaces. A namespace isn't a "dedicated instance of infra", it's just a logical space in which devs can deploy their own version of something. We often used it with one namespace per team. They only deployed the containers they were changing, and used the shared instances of everything else, so it was actually quite economical. […] there are "hidden" complications to making this work, no question. We customized our service mesh so that requests to another service would first try to target a version of the service in the same namespace, and if there wasn't one then the request would go to the shared namespace. That allowed devs to run a custom version of a service privately, while others could use the shared one.
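The lookup order described in that quote (same namespace first, then the shared one) can be illustrated with a short sketch. This is not the service mesh configuration the quote refers to, which isn't shown; it only models the fallback decision in plain Python. The function name, the namespace name shared, and the service inventory are all hypothetical.

```python
def resolve_target(service, caller_namespace, deployed, shared_namespace="shared"):
    """Pick where a request should go: the caller's namespace first, then the shared one.

    `deployed` maps namespace name -> set of service names running there.
    Returns the DNS name of the chosen service instance.
    """
    if service in deployed.get(caller_namespace, set()):
        chosen = caller_namespace
    elif service in deployed.get(shared_namespace, set()):
        chosen = shared_namespace
    else:
        raise LookupError(f"{service} is not deployed in {caller_namespace} or {shared_namespace}")
    return f"{service}.{chosen}.svc.cluster.local"


if __name__ == "__main__":
    # Hypothetical inventory: the team only deploys the service it is changing.
    deployed = {
        "shared": {"orders", "payments", "search"},
        "team-jacob": {"payments"},
    }
    # The team's private build of payments wins over the shared one.
    print(resolve_target("payments", "team-jacob", deployed))
    # Everything else falls back to the shared namespace.
    print(resolve_target("orders", "team-jacob", deployed))
```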

At Lyft, Razorpay, and DoorDash, large teams use a routing approach to let individual developers run services under test, either in production or in a high-fidelity staging environment. Later articles in this series will discuss alternatives to using namespaces for testing in Kubernetes. If you enjoyed this piece and want to talk about high-fidelity tests on Kubernetes at scale, or if you hated the article and want to tell Nica about it, join the Signadot Slack and talk directly with the team!
