Why 90% of Microservices Still Ship Like Monoliths

If your team can't push a single microservice change to production independently, you don't actually have microservices.

The promise of microservices is tantalizing — independent deployments, team autonomy and rapid releases. Yet after working with hundreds of engineering teams, I’ve observed a striking pattern: Many growing organizations have microservice architectures but monolithic release processes.

Here’s the uncomfortable truth: If your team can’t push a single microservice change to production independently, you don’t actually have microservices. You have a distributed monolith with extra complexity.

The Batching Problem No One Talks About

The scenario is painfully familiar. Engineers develop code in isolation, run some local tests against mocks, submit a pull request and get it approved. The PR is merged to the main branch, where it joins dozens of other changes waiting to be deployed.

Then the waiting game begins.

These changes accumulate until someone triggers a deployment to a shared environment — usually integration or staging. Comprehensive test suites run, often taking hours. Inevitably, something breaks. But which change broke it? Was it your PR from three days ago? Someone else’s? An interaction between multiple changes?

This murder mystery consumes hours or days of debugging. Engineers context-switch back to code they wrote days ago, fix issues and restart the process. This cycle repeats across multiple environments until, eventually, changes reach production — often weeks after they were written.

Why does every team end up here? Two main constraints drive this batching behavior:

- Cost and time: Integration test suites are expensive and slow. Running full tests for every PR across 100+ developers could cost $500,000+ monthly in CI time alone.

- Environment scarcity: Most teams have two to three shared environments serving dozens of developers. There simply aren’t enough environments for isolated testing of individual changes.

It’s like driving a race car with the parking brake on. You’ve invested in all this power and capability, only to have it hampered by seemingly unavoidable constraints.

The Real Cost of Batched Releases

This batched approach to testing and release carries enormous hidden costs:

- Extended lead time: Changes take days or weeks to reach production, destroying the agility promised by microservices.

- Context switching tax: Engineers forced to revisit old code suffer productivity losses of 20% to 40% with each switch.

- Debugging complexity: Finding the root cause in a batch of 50+ changes is exponentially harder than in a single PR.

- Decreased quality: With less ownership over the release process, engineers invest less in test quality and automation.

- Loss of independence: Teams become tightly coupled by release schedules, negating a core benefit of microservices.

Worst of all, this approach creates a vicious cycle. As release quality decreases, organizations add more environments and more testing phases, further slowing the process.

Breaking Free: Testing Individual Changes

The obvious solution to batch testing is simple: Test every code change individually. Many teams already attempt this using mocked dependencies for contract testing and integration validation.

Mocks work well for testing negative cases and edge conditions, but they create two critical problems: constant maintenance overhead and low-fidelity feedback. Every service change requires updating dozens of mocks across codebases. More critically, mocks miss timing issues, network failures and the subtle behavioral differences that emerge in real service interactions.

So the question becomes: Is it possible to test every code change individually against real environments without prohibitive cost?

The Sandbox Solution

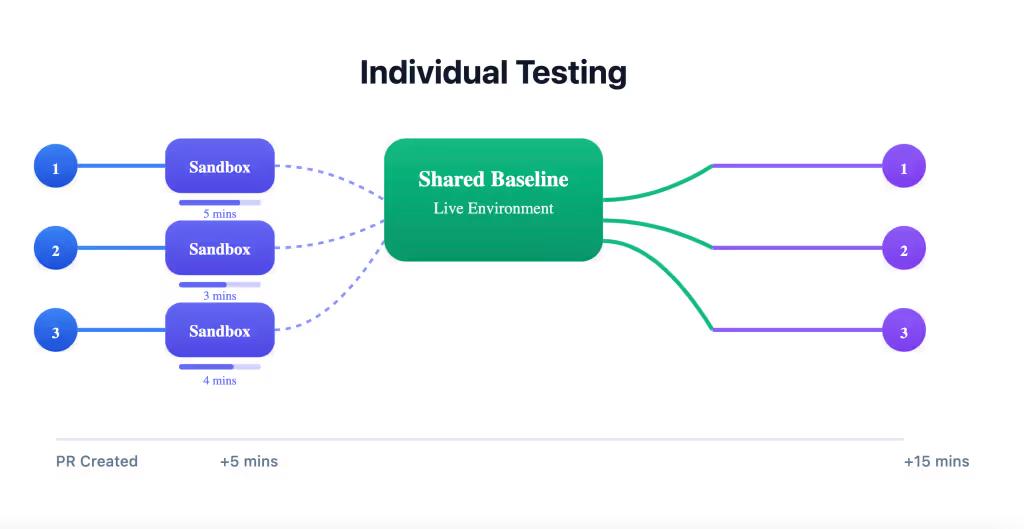

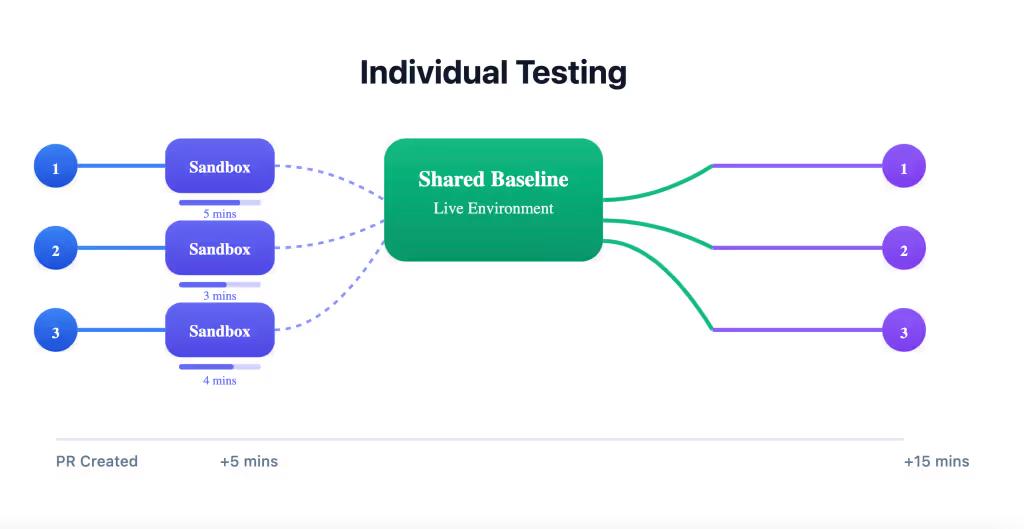

Sandbox environments transform this equation by providing lightweight isolation that leverages shared, live infrastructure. Instead of choosing between expensive full environment duplication or unrealistic mocks, sandboxes offer a third path.

Here’s the breakthrough: When you modify Service A, your sandbox spins up only Service A while routing requests to the current, live versions of Services B, C and D. This enables comprehensive testing against the latest reality of your system, not stale mocks or snapshots.

The workflow transforms:

- An engineer submits a PR.

- A sandbox spins up automatically with only modified services.

- Multiple test types run against real dependencies in minutes — contract tests, integration tests, performance tests, security scans.

- Engineers fix issues while code is fresh.

- Only after validation does the PR merge.

The key insight: Sandboxes become a platform for shift-left testing. Instead of batching different test types into separate phases, you run relevant tests for each code change against real dependencies. No more staging bottlenecks. No more debugging failures across dozens of batched changes.

A FinTech team reduced their time-to-production from nine days to under two hours using this approach. Debugging time dropped to near zero because each failure traces directly to a specific, isolated code change.

Breaking the Batch Habit

The technical barriers to individual change testing have fallen. Modern tooling like Signadot provides sandbox environments without the need to duplicate infrastructure or build complex isolation systems in-house, saving teams millions in development costs and months of engineering effort.

Microservices promised us independence, but most teams remain shackled to batch releases by organizational habits, not technical limitations. The teams making this shift are shipping faster, with higher quality and happier engineers.

The path forward is clear. Will your team continue doing microservices wrong — or start doing them right?

Related Reading

Subscribe

Get the latest updates from Signadot

Why 90% of Microservices Still Ship Like Monoliths

If your team can't push a single microservice change to production independently, you don't actually have microservices.

The promise of microservices is tantalizing — independent deployments, team autonomy and rapid releases. Yet after working with hundreds of engineering teams, I’ve observed a striking pattern: Many growing organizations have microservice architectures but monolithic release processes.

Here’s the uncomfortable truth: If your team can’t push a single microservice change to production independently, you don’t actually have microservices. You have a distributed monolith with extra complexity.

The Batching Problem No One Talks About

The scenario is painfully familiar. Engineers develop code in isolation, run some local tests against mocks, submit a pull request and get it approved. The PR is merged to the main branch, where it joins dozens of other changes waiting to be deployed.

Then the waiting game begins.

These changes accumulate until someone triggers a deployment to a shared environment — usually integration or staging. Comprehensive test suites run, often taking hours. Inevitably, something breaks. But which change broke it? Was it your PR from three days ago? Someone else’s? An interaction between multiple changes?

This murder mystery consumes hours or days of debugging. Engineers context-switch back to code they wrote days ago, fix issues and restart the process. This cycle repeats across multiple environments until, eventually, changes reach production — often weeks after they were written.

Why does every team end up here? Two main constraints drive this batching behavior:

- Cost and time: Integration test suites are expensive and slow. Running full tests for every PR across 100+ developers could cost $500,000+ monthly in CI time alone.

- Environment scarcity: Most teams have two to three shared environments serving dozens of developers. There simply aren’t enough environments for isolated testing of individual changes.

It’s like driving a race car with the parking brake on. You’ve invested in all this power and capability, only to have it hampered by seemingly unavoidable constraints.

The Real Cost of Batched Releases

This batched approach to testing and release carries enormous hidden costs:

- Extended lead time: Changes take days or weeks to reach production, destroying the agility promised by microservices.

- Context switching tax: Engineers forced to revisit old code suffer productivity losses of 20% to 40% with each switch.

- Debugging complexity: Finding the root cause in a batch of 50+ changes is exponentially harder than in a single PR.

- Decreased quality: With less ownership over the release process, engineers invest less in test quality and automation.

- Loss of independence: Teams become tightly coupled by release schedules, negating a core benefit of microservices.

Worst of all, this approach creates a vicious cycle. As release quality decreases, organizations add more environments and more testing phases, further slowing the process.

Breaking Free: Testing Individual Changes

The obvious solution to batch testing is simple: Test every code change individually. Many teams already attempt this using mocked dependencies for contract testing and integration validation.

Mocks work well for testing negative cases and edge conditions, but they create two critical problems: constant maintenance overhead and low-fidelity feedback. Every service change requires updating dozens of mocks across codebases. More critically, mocks miss timing issues, network failures and the subtle behavioral differences that emerge in real service interactions.

So the question becomes: Is it possible to test every code change individually against real environments without prohibitive cost?

The Sandbox Solution

Sandbox environments transform this equation by providing lightweight isolation that leverages shared, live infrastructure. Instead of choosing between expensive full environment duplication or unrealistic mocks, sandboxes offer a third path.

Here’s the breakthrough: When you modify Service A, your sandbox spins up only Service A while routing requests to the current, live versions of Services B, C and D. This enables comprehensive testing against the latest reality of your system, not stale mocks or snapshots.

The workflow transforms:

- An engineer submits a PR.

- A sandbox spins up automatically with only modified services.

- Multiple test types run against real dependencies in minutes — contract tests, integration tests, performance tests, security scans.

- Engineers fix issues while code is fresh.

- Only after validation does the PR merge.

The key insight: Sandboxes become a platform for shift-left testing. Instead of batching different test types into separate phases, you run relevant tests for each code change against real dependencies. No more staging bottlenecks. No more debugging failures across dozens of batched changes.

A FinTech team reduced their time-to-production from nine days to under two hours using this approach. Debugging time dropped to near zero because each failure traces directly to a specific, isolated code change.

Breaking the Batch Habit

The technical barriers to individual change testing have fallen. Modern tooling like Signadot provides sandbox environments without the need to duplicate infrastructure or build complex isolation systems in-house, saving teams millions in development costs and months of engineering effort.

Microservices promised us independence, but most teams remain shackled to batch releases by organizational habits, not technical limitations. The teams making this shift are shipping faster, with higher quality and happier engineers.

The path forward is clear. Will your team continue doing microservices wrong — or start doing them right?

Related Reading

Subscribe

Get the latest updates from Signadot