Agentic Coding Tools Are Accelerating Output, Not Velocity


Image by W.S. Coda from Unsplash.

We are at a pivotal moment in software development. In just a few years, LLMs have gone from impressive chatbots to full-blown coding agents built directly into your IDE.

This has the entire industry racing to accelerate developer velocity with AI. With 84% of developers now using AI tools, and companies like Google stating that 25% or more of their code is now generated by AI, the mandate is clear.

So far, this hasn’t translated into significantly more code being generated, but that is already changing. Models and tooling are evolving rapidly, with agents now able to work in parallel on longer coding tasks.

Right now, this workflow is still maturing. We are in a transition phase where tools are helpful but often require heavy verification, limiting the actual output of code that makes it to PR. But as the tools continue to get better, the per-developer output of code is only going to increase.

And that is the goal. Teams are adopting these tools now with the expectation that they will continue to improve developer velocity, ultimately enabling the same teams to write 5x or 10x more code.

But the future state where that goal is achieved presents a critical challenge. The resulting explosion in code output will inevitably make existing CI/CD bottlenecks worse in direct proportion to the coding efficiencies gained.

The Invisible Queue

Picture the emerging workflow. A developer runs three Cursor sessions at once. One refactors the auth service. Another adds a feature to the notification system. A third optimizes database queries. By the end of the day she has three PRs ready to publish. Multiply that by a hundred developers and you get a sense of the scale of code that will be hitting legacy CI/CD pipelines.

Without modernizing validation infrastructure in parallel with developer tooling, the acceleration in code generation will never translate to an equal acceleration in productivity. Instead, it will create a merge crisis. All those PRs will pile up at the exact same chokepoint: validation.

Code review becomes the new bottleneck. Even with AI-assisted reviews, humans still need to sign off. If teams achieve their goal of 5x developer output, that translates to hundreds of PRs a week needing eyeballs, testing, and approval. The queue grows. PRs age. Conflicts multiply.
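To make the queue growth concrete, here is a back-of-the-envelope sketch. The numbers are illustrative assumptions, not measurements: a team whose review capacity is sized for 100 PRs a week, before and after a 5x jump in PR output. The function name `simulate_backlog` is made up for this example.

```python
def simulate_backlog(prs_per_week: int, reviews_per_week: int, weeks: int) -> list[int]:
    """Return the number of unreviewed PRs at the end of each week."""
    backlog = 0
    history = []
    for _ in range(weeks):
        backlog += prs_per_week                    # new PRs arrive
        backlog -= min(backlog, reviews_per_week)  # reviewers clear what they can
        history.append(backlog)
    return history


# Assumed figures: review capacity matches today's output of 100 PRs/week.
baseline = simulate_backlog(prs_per_week=100, reviews_per_week=100, weeks=4)
five_x = simulate_backlog(prs_per_week=500, reviews_per_week=100, weeks=4)

print(baseline)  # [0, 0, 0, 0]
print(five_x)    # [400, 800, 1200, 1600]
```

In this toy model the backlog never stabilizes: as long as output exceeds review capacity, the queue grows by the difference every week.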

But the real chaos happens post-merge. All that code lands in staging, and staging breaks, because nobody could actually test how their microservice changes interact until they're all deployed together. Now 100 developers are blocked, staring at a broken staging environment, waiting for someone to untangle the mess.

The Only Way Forward

There's only one solution, and it's counterintuitive: test more, but earlier.

Not unit tests. Not mocks. Real integration tests. End-to-end tests. Running against actual services. But here's the key: run them at the granularity of every code change, during local development, before the PR even exists.

Think about it differently. Right now, that Cursor session is generating code. It writes a new API endpoint, updates three service calls, modifies a database schema. The developer reviews the code, thinks it looks good, publishes the PR. Then the waiting starts. Code review queue. CI pipeline. Manual testing in staging. Maybe it works. Maybe it doesn't. Either way, you find out hours or days later.

What if instead, while Cursor is writing that code, it's also spinning up a lightweight test environment, deploying those changes, running integration tests, and validating everything works, all before the developer even switches back to that tab?

That's not science fiction. That's how development needs to work in the agentic era.

Shifting Left, For Real This Time

We've been talking about "shift left" for years. This time it's not optional. It's critical for survival.

The paradigm: every unit of code produced by an AI agent gets tested end-to-end during local development. Not after merge. Not during the PR review. While being written.

When this works, something magical happens. The PR that lands for review isn't a leap of faith. It's already validated. The reviewer sees: "15 integration tests passed. 3 end-to-end flows validated. Contract tests clean." Code review becomes fast. Confidence is high. Merges happen quickly. Staging stays green.

This is where Signadot comes in.

Making It Real

The solution isn't better code review tools or faster CI pipelines. It's parallelizing validation itself.

Parallelize Validation

To unblock the queue, we must enable full integration testing during local development.

Signadot allows developers to use the existing staging environment as a shared baseline. By isolating requests rather than duplicating infrastructure, every developer gets a sandboxed virtual private staging environment.

This allows 100+ developers to test in parallel on the same cluster without impacting each other. No queue. No contention. No waiting for staging to be free.
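The idea of isolating requests instead of duplicating infrastructure can be sketched in a few lines. This is a conceptual illustration only, not Signadot's actual implementation: the header name `x-routing-key`, the `Router` class, and the service addresses are all hypothetical.

```python
from dataclasses import dataclass, field

ROUTING_HEADER = "x-routing-key"  # hypothetical header name


@dataclass
class Router:
    # service name -> address of the shared baseline (staging) version
    baseline: dict[str, str]
    # routing key -> {service name -> address of that sandbox's version}
    sandboxes: dict[str, dict[str, str]] = field(default_factory=dict)

    def resolve(self, service: str, headers: dict[str, str]) -> str:
        """Pick the sandboxed version if this request's key overrides the service."""
        key = headers.get(ROUTING_HEADER)
        overrides = self.sandboxes.get(key, {})
        return overrides.get(service, self.baseline[service])


router = Router(baseline={
    "auth": "auth.staging:8080",
    "notifications": "notifications.staging:8080",
})
# One developer's sandbox replaces only the service she changed.
router.sandboxes["dev-alice"] = {"auth": "auth-alice.sandbox:8080"}

tagged = {ROUTING_HEADER: "dev-alice"}
print(router.resolve("auth", tagged))           # auth-alice.sandbox:8080
print(router.resolve("notifications", tagged))  # notifications.staging:8080
print(router.resolve("auth", {}))               # auth.staging:8080
```

Untagged traffic and every service the developer did not touch still hit the shared baseline, which is why many sandboxes can coexist on one cluster in this model.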

Agent-Native Infrastructure via MCP

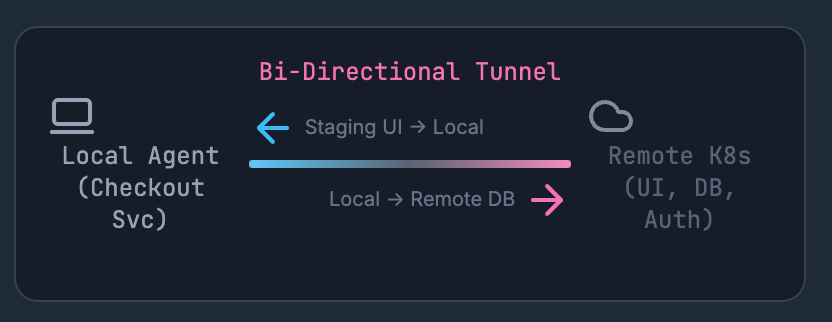

Signadot integrates directly into the AI coding workflow via the Signadot MCP (Model Context Protocol) Server.

Tools like Cursor and Claude Code can now "speak" infrastructure. They use plain English commands to instantiate sandboxes, configure routing, and manage resources directly from the agent interface.

The agent doesn't need you to switch contexts. It handles infrastructure the same way it handles code.

Bi-Directional Tunneling

Once the sandbox is requested, Signadot establishes a secure, bi-directional tunnel between the agent's local environment and the remote Kubernetes cluster.

Requests triggered from the staging UI in the cluster are routed to your local machine. Simultaneously, your locally running service directly hits remote dependencies—database, auth, other microservices—in the cluster. You're testing against the real stack, not mocks.

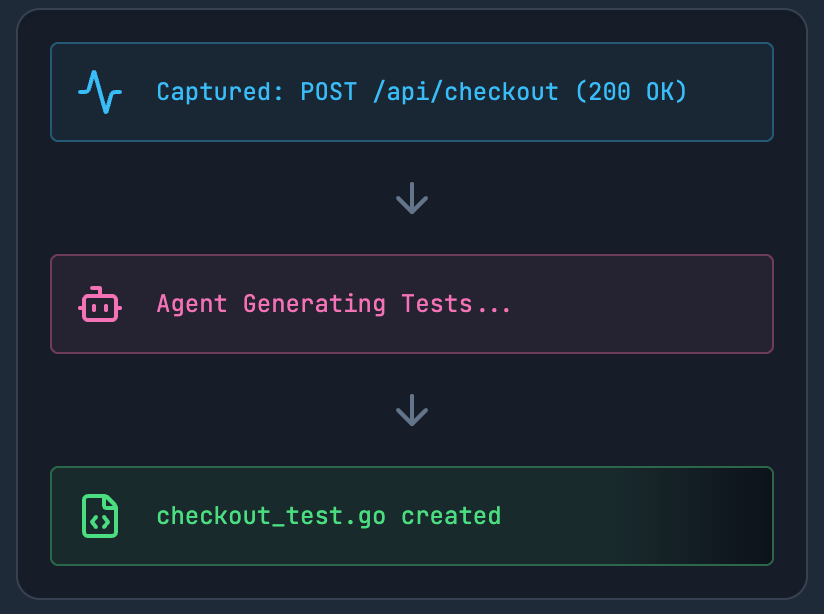

AI-Driven Test Generation via Traffic Capture

As you interact with your local service, Signadot captures the actual request/response traffic flows. The coding agent analyzes this captured traffic to automatically generate functional API tests, ensuring your changes don't break existing contracts.

No manual test writing. The agent sees how your service actually behaves and writes tests that validate those behaviors.
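As a rough sketch of what "tests from captured traffic" can mean, the snippet below turns recorded request/response pairs into a simple contract (status code plus response shape) and checks new responses against it. The capture format and the helper names `shape`, `build_contract`, and `check` are assumptions for illustration; a real agent would work from whatever format the capture tooling actually emits.

```python
def shape(value):
    """Reduce a JSON value to its structure: key names and type names only."""
    if isinstance(value, dict):
        return {k: shape(v) for k, v in sorted(value.items())}
    if isinstance(value, list):
        return [shape(value[0])] if value else []
    return type(value).__name__


def build_contract(captured):
    """Turn captured exchanges into expectations keyed by (method, path)."""
    return {
        (ex["method"], ex["path"]): {"status": ex["status"], "shape": shape(ex["body"])}
        for ex in captured
    }


def check(contract, method, path, status, body):
    """True if a new response matches the recorded status and shape."""
    expected = contract[(method, path)]
    return expected["status"] == status and expected["shape"] == shape(body)


# Hypothetical captured exchange.
captured = [
    {"method": "GET", "path": "/users/1", "status": 200,
     "body": {"id": 1, "name": "Ada"}},
]
contract = build_contract(captured)

print(check(contract, "GET", "/users/1", 200, {"id": 7, "name": "Grace"}))    # True
print(check(contract, "GET", "/users/1", 200, {"id": "7", "name": "Grace"}))  # False
```

The second check fails because `id` changed from a number to a string, the kind of silent contract break that is hard to spot in code review.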

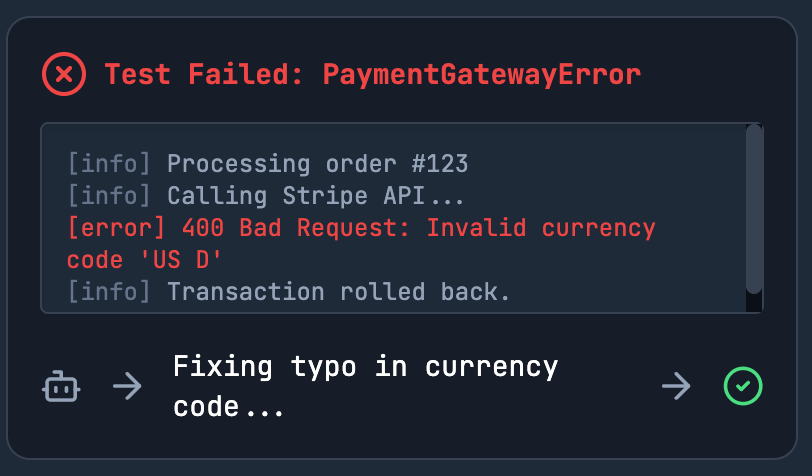

Autonomous Debugging Loop

Test failed? The agent reads the live logs streamed directly from the Signadot sandbox to pinpoint the error. It corrects the code and reruns the tests instantly in the same sandbox.

This closes the feedback loop without human intervention. The agent iterates until tests pass, all during local development.
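The loop itself is simple to picture. Below is a minimal sketch, assuming two hypothetical callbacks: `run_tests` returns a pass/fail flag plus logs, and `propose_fix` stands in for the agent editing code based on those logs. An attempt budget keeps the agent from iterating forever.

```python
from typing import Callable


def fix_until_green(
    run_tests: Callable[[], tuple[bool, str]],
    propose_fix: Callable[[str], None],
    max_attempts: int = 5,
) -> int:
    """Run tests, feed failure logs to the fixer, repeat.

    Returns the number of test runs it took to pass.
    Raises RuntimeError if the budget runs out first.
    """
    for attempt in range(1, max_attempts + 1):
        passed, logs = run_tests()
        if passed:
            return attempt
        propose_fix(logs)  # the agent reads the logs and edits the code
    raise RuntimeError(f"tests still failing after {max_attempts} attempts")


# Stand-in stubs: each "fix" removes one of two pretend bugs.
state = {"bugs": 2}


def fake_run_tests() -> tuple[bool, str]:
    return state["bugs"] == 0, f"{state['bugs']} test(s) failing"


def fake_propose_fix(logs: str) -> None:
    state["bugs"] -= 1


attempts = fix_until_green(fake_run_tests, fake_propose_fix)
print(attempts)  # 3
```

When the budget is exhausted, the sketch raises instead of looping, which is the point where a human would be pulled back in.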

When you publish the PR, you attach the sandbox link. Your reviewer sees exactly what was tested, how it performed, and what edge cases were validated. The PR goes from "needs investigation" to "LGTM" in minutes.

The Real Unlock

Agentic coding tools promise 10x code generation, and companies are racing to achieve that goal. But without granular pre-merge testing, we will remain stuck with 1x shipping velocity.

The companies that will win are not the ones generating the most code. They are the ones who can validate and ship that code as fast as it is written. That means testing at the same granularity as coding. Every change. Every branch. Every experiment.

The bottleneck is moving. The question is whether your testing infrastructure is moving with it.

At Signadot, we're building the platform that makes agentic coding actually productive. Because code in a PR queue isn't value. Code in production is.
